Operating system:

CentOS 7.3

Software versions:

kubernetes 1.9

nginx-ingress-controller:0.10.2

defaultbackend:1.4

haproxy-ingress:latest

defaultbackend:1.0

Options Kubernetes currently provides for exposing services

Introduction


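1. NodePort

Set the Service type to NodePort. Kubernetes then opens that port on every host node, and kube-proxy listens on it. Currently kube-proxy programs iptables rules that load-balance the traffic across the pods, so an external request travels host_ip:port -> pod_ip:port. For higher availability you can put a load balancer in front of the cluster, which gives loadbalancer_ip:port -> host_ip:port -> pod_ip:port.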
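For illustration, a NodePort Service might look like the sketch below. The name `my-app`, the label `app: my-app`, and the port numbers are hypothetical. If `nodePort` is omitted, Kubernetes picks a free port from its NodePort range (30000-32767 by default).

```yaml
# Hypothetical NodePort Service; the name, label and port numbers are assumptions.
apiVersion: v1
kind: Service
metadata:
  name: my-app
spec:
  type: NodePort
  selector:
    app: my-app        # pods with this label receive the traffic
  ports:
  - protocol: TCP
    port: 80           # port on the Service's cluster IP
    targetPort: 80     # port the container listens on
    nodePort: 30080    # port opened on every node; omit it to let Kubernetes pick one
```

Clients would then reach the pods at http://<any-node-ip>:30080.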

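2. LoadBalancer

The cloud platform's own load balancer distributes the traffic to the pods. This only works on cloud providers that support it (at the time of writing, for example, Google Container Engine), and it only balances TCP/UDP traffic.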

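3. Ingress

An Ingress exposes internal services through a layer-7 load balancer. It forwards requests to backend Services according to the host name or URL path, and dynamically refreshes its configuration as the backend Services change.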
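As a sketch of path-based routing on Kubernetes 1.9 (which uses the `extensions/v1beta1` Ingress API), the rule below sends two URL prefixes of one host to two different Services. The host `example.com` and the Services `app1-svc` and `app2-svc` are hypothetical.

```yaml
# Hypothetical fan-out Ingress; host, paths and Service names are assumptions.
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: example-fanout
spec:
  rules:
  - host: example.com
    http:
      paths:
      - path: /app1              # example.com/app1 -> Service app1-svc
        backend:
          serviceName: app1-svc
          servicePort: 80
      - path: /app2              # example.com/app2 -> Service app2-svc
        backend:
          serviceName: app2-svc
          servicePort: 80
```

Unless the controller is configured to rewrite paths, the backends receive the request path unchanged (for example /app1/index.html), so they must serve content under that prefix.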

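Why do we need Ingress?

If every application is exposed on a host port through NodePort, port management becomes chaotic once there are many Services, and inside an enterprise each of those ports may also need a firewall rule.

Here we introduce three Ingress implementations; choose whichever one suits you: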

- 1、nginx-ingress

- 2、haproxy-ingress

- 3、Traefik-ingress

An Ingress setup has two main components:

ingress-controller

default-backend

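The ingress-controller is itself a pod whose container runs reverse-proxy software. It reads the Ingress resources you add and dynamically generates the reverse-proxy (load balancer) configuration from them. Each Ingress resource contains rules that map host names to backend Services.

How does the ingress-controller read the Ingress resources we add and refresh its configuration dynamically?

The ingress-controller talks to kube-apiserver to watch for changes to Ingress rules. From those rules it generates a new load balancer configuration and reloads the proxy so that the new configuration takes effect.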

default-backend

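All requests that match no rule are sent to this default backend, which returns a 404 page.

Ingress controllers are available based on nginx, haproxy, and traefik.

Deploying an nginx ingress

The following YAML files are needed: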

namespace.yaml: creates the ingress-nginx namespace.

default-backend.yaml: the default backend the project requires, which serves the 404 page. It also exposes a /healthz endpoint that its liveness probe polls periodically.

configmap.yaml: creates the nginx-configuration ConfigMap.

tcp-services-configmap.yaml: creates the tcp-services ConfigMap.

udp-services-configmap.yaml: creates the udp-services ConfigMap.

rbac.yaml: creates the ServiceAccount and binds it to the required roles.

with-rbac.yaml: creates the ingress controller, running under that RBAC-enabled ServiceAccount.
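The tcp-services and udp-services ConfigMaps let the nginx controller forward raw TCP/UDP ports, which Ingress rules cannot express. Each key is the port the controller listens on and each value is `<namespace>/<service>:<port>`. A sketch of one entry, assuming a hypothetical MySQL Service named `mysql` in the `default` namespace:

```yaml
# Sketch of a tcp-services entry; the mysql Service is a hypothetical backend.
apiVersion: v1
kind: ConfigMap
metadata:
  name: tcp-services
  namespace: ingress-nginx
data:
  "3306": "default/mysql:3306"   # controller port 3306 -> Service mysql, port 3306
```

In the manifests of that era the controller found this ConfigMap through the `--tcp-services-configmap` argument in with-rbac.yaml; the forwarded port also has to be exposed by whatever Service or host network sits in front of the controller.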

Create a directory

mkdir /root/ingress

Download

wget https://raw.githubusercontent.com/kubernetes/ingress-nginx/master/deploy/namespace.yaml -P /root/ingress

wget https://raw.githubusercontent.com/kubernetes/ingress-nginx/master/deploy/default-backend.yaml -P /root/ingress

wget https://raw.githubusercontent.com/kubernetes/ingress-nginx/master/deploy/configmap.yaml -P /root/ingress

wget https://raw.githubusercontent.com/kubernetes/ingress-nginx/master/deploy/tcp-services-configmap.yaml -P /root/ingress

wget https://raw.githubusercontent.com/kubernetes/ingress-nginx/master/deploy/udp-services-configmap.yaml -P /root/ingress

wget https://raw.githubusercontent.com/kubernetes/ingress-nginx/master/deploy/rbac.yaml -P /root/ingress

wget https://raw.githubusercontent.com/kubernetes/ingress-nginx/master/deploy/with-rbac.yaml -P /root/ingress

Install. Apply namespace.yaml first: kubectl processes a directory in file-name order, so the other manifests could otherwise be created before the ingress-nginx namespace exists.

kubectl apply -f /root/ingress/namespace.yaml

kubectl apply -f /root/ingress/

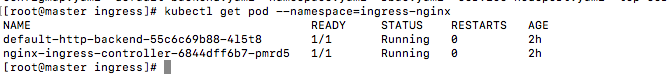

Check that the pods have been created

kubectl get pod --namespace=ingress-nginx

Because newer versions no longer use host network mode, we need to create a Service to expose the ingress controller's ports.

Download

wget https://raw.githubusercontent.com/kubernetes/ingress-nginx/master/deploy/provider/baremetal/service-nodeport.yaml -P /root/ingress

kubectl apply -f /root/ingress/service-nodeport.yaml

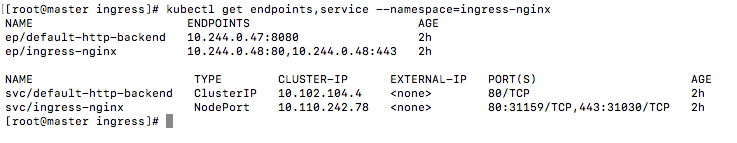

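Check the endpoints and the Service

kubectl get endpoints,service --namespace=ingress-nginx

Port 80 is mapped to port 31159 on the hosts and port 443 to port 31030. These NodePorts are assigned at random, so yours will likely differ.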
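If you want the controller to keep fixed ports (here 31159 and 31030) even after the Service is recreated, you could set `nodePort` explicitly in service-nodeport.yaml. The sketch below assumes the Service is named `ingress-nginx` and selects pods labeled `app: ingress-nginx`, as the upstream manifest did at the time; check both against your downloaded copy.

```yaml
# Sketch of service-nodeport.yaml with pinned node ports.
# The name, namespace and selector are assumed to match the downloaded manifest.
apiVersion: v1
kind: Service
metadata:
  name: ingress-nginx
  namespace: ingress-nginx
spec:
  type: NodePort
  selector:
    app: ingress-nginx
  ports:
  - name: http
    protocol: TCP
    port: 80
    targetPort: 80
    nodePort: 31159    # pinned instead of randomly assigned
  - name: https
    protocol: TCP
    port: 443
    targetPort: 443
    nodePort: 31030
```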

Deploying a test application

Deploy two httpd instances

cat httpd-app.yaml

apiVersion: apps/v1beta2 # for versions before 1.8.0 use apps/v1beta1
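Only the first line of httpd-app.yaml survived in this copy. A minimal sketch of what it might contain, assuming two Deployments named `httpd` and `httpd2` (names and labels are hypothetical) that mount the /root/httpd and /root/httpd2 directories over the web root of the official `httpd` image (/usr/local/apache2/htdocs) through hostPath volumes. Because hostPath is node-local, the directories and test pages must exist on whichever node each pod is scheduled to.

```yaml
# Hypothetical reconstruction of httpd-app.yaml; names and labels are assumptions.
apiVersion: apps/v1beta2 # for versions before 1.8.0 use apps/v1beta1
kind: Deployment
metadata:
  name: httpd
spec:
  replicas: 1
  selector:
    matchLabels:
      app: httpd
  template:
    metadata:
      labels:
        app: httpd
    spec:
      containers:
      - name: httpd
        image: httpd
        ports:
        - containerPort: 80
        volumeMounts:
        - name: htdocs
          mountPath: /usr/local/apache2/htdocs   # web root of the official httpd image
      volumes:
      - name: htdocs
        hostPath:
          path: /root/httpd                      # must exist on the node running the pod
---
apiVersion: apps/v1beta2
kind: Deployment
metadata:
  name: httpd2
spec:
  replicas: 1
  selector:
    matchLabels:
      app: httpd2
  template:
    metadata:
      labels:
        app: httpd2
    spec:
      containers:
      - name: httpd2
        image: httpd
        ports:
        - containerPort: 80
        volumeMounts:
        - name: htdocs
          mountPath: /usr/local/apache2/htdocs
      volumes:
      - name: htdocs
        hostPath:
          path: /root/httpd2
```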

Create two directories to replace httpd's web root

mkdir /root/httpd

mkdir /root/httpd2

Write a simple test page

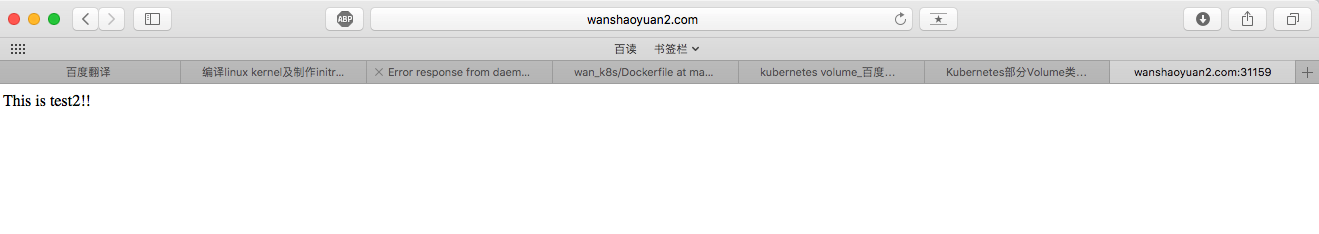

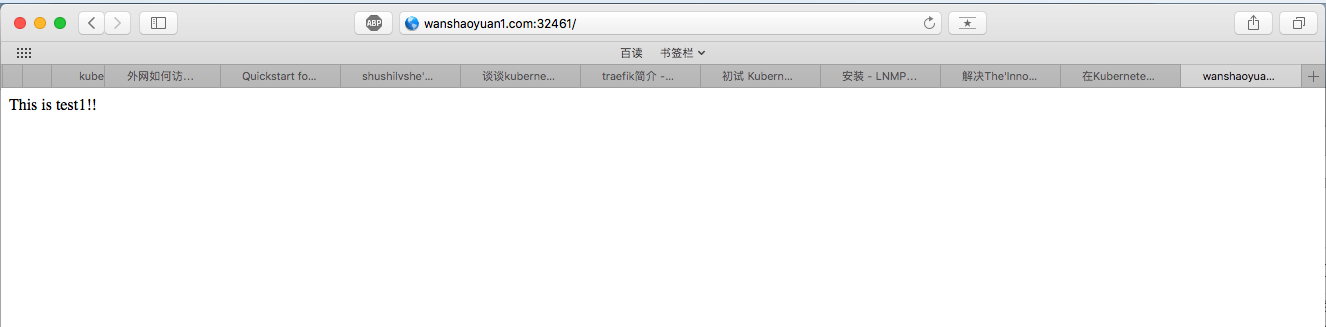

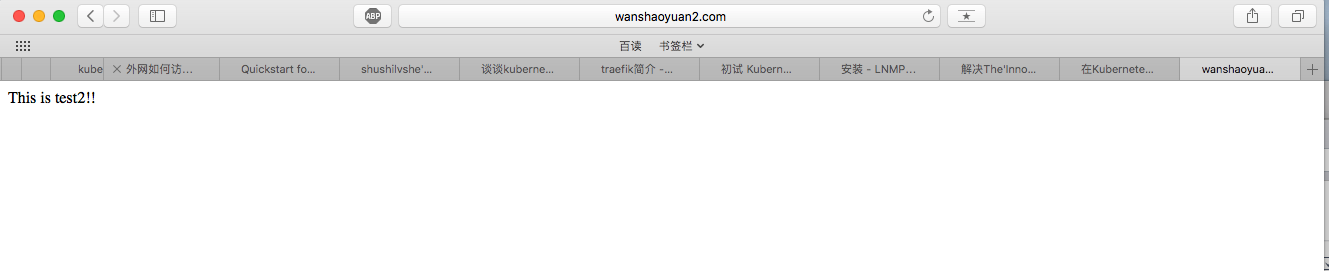

echo 'This is test1!!' > /root/httpd/index.html

echo 'This is test2!!' > /root/httpd2/index.html

(The single quotes stop an interactive bash from treating `!!` as history expansion.)

Create the application

kubectl apply -f httpd-app.yaml

Check

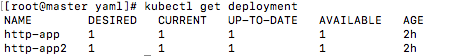

kubectl get deployment

Create the corresponding Services

cat httpd_service.yaml

kind: Service
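The Service listing above is truncated. A minimal sketch of what httpd_service.yaml might contain, assuming the httpd pods carry the labels `app: httpd` and `app: httpd2` (hypothetical) and listen on port 80. A plain ClusterIP Service is enough here, because traffic reaches it through the ingress controller rather than a node port.

```yaml
# Hypothetical reconstruction of httpd_service.yaml; names and selectors are assumptions.
apiVersion: v1
kind: Service
metadata:
  name: httpd
spec:
  selector:
    app: httpd         # must match the labels of the first httpd Deployment's pods
  ports:
  - port: 80
    targetPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: httpd2
spec:
  selector:
    app: httpd2        # must match the labels of the second httpd Deployment's pods
  ports:
  - port: 80
    targetPort: 80
```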

Create the Services

kubectl apply -f httpd_service.yaml

Check

kubectl get service

Create the Ingress

cat httpd-ingress.yaml

apiVersion: extensions/v1beta1
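The Ingress listing is also truncated. A sketch of host-based routing for the two test apps, assuming Services named `httpd` and `httpd2` on port 80. The host wanshaoyuan1.com is the one used in the browser test of this walkthrough; which Service it maps to, and the second host `wanshaoyuan2.com`, are assumptions.

```yaml
# Hypothetical reconstruction of httpd-ingress.yaml (Kubernetes 1.9 Ingress API).
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: httpd-ingress
spec:
  rules:
  - host: wanshaoyuan1.com       # requests with this Host header go to Service httpd
    http:
      paths:
      - path: /
        backend:
          serviceName: httpd
          servicePort: 80
  - host: wanshaoyuan2.com       # assumed second host, routed to Service httpd2
    http:
      paths:
      - path: /
        backend:
          serviceName: httpd2
          servicePort: 80
```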

Apply

kubectl apply -f httpd-ingress.yaml

Check

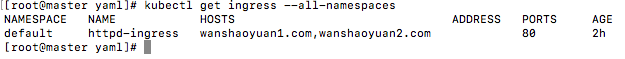

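kubectl get ingress --all-namespaces

Test the service

Edit the hosts file on the client so that the test domain (wanshaoyuan1.com) resolves to the IP of one of the cluster nodes

Open a browser and visit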

http://wanshaoyuan1.com:31159

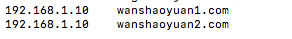

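View the nginx.conf generated by the ingress controller (replace the pod name with the one in your cluster)

kubectl --namespace=ingress-nginx exec -it nginx-ingress-controller-756ffbdb79-5tjs5 -- cat /etc/nginx/nginx.conf

You can see that the ingress controller has added these two upstreams: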

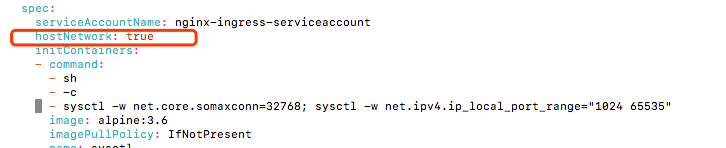

If you want the ingress controller to use the host's network instead of exposing its ports through a Service, modify with-rbac.yaml.
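A sketch of that change, assuming with-rbac.yaml defines the controller as a Deployment; only the relevant fields of the pod template are shown, and the rest of the manifest stays as downloaded. `hostNetwork` and `dnsPolicy` are standard pod spec fields.

```yaml
# Fragment to merge into the controller Deployment in with-rbac.yaml (not a complete manifest).
spec:
  template:
    spec:
      hostNetwork: true                    # controller binds ports 80/443 directly on the node
      dnsPolicy: ClusterFirstWithHostNet   # keep resolving cluster DNS names while on the host network
```

With host networking, ports 80 and 443 must be free on every node that may run the controller, and clients connect to the node IP directly instead of a NodePort.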

Deploying an haproxy ingress

default-backend.yaml: the default backend the project requires, which serves the 404 page. It also exposes a /healthz endpoint that can be used to check its health.

haproxy-ingress.yaml: deploys the haproxy-ingress-controller.

rbac.yaml: creates the RBAC permissions and the ServiceAccount.

GitHub examples:

https://github.com/jcmoraisjr/haproxy-ingress/tree/master/examples

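If your Kubernetes cluster uses RBAC authorization, you need to create a ServiceAccount and bind it to the appropriate Role and ClusterRole; otherwise the ingress controller cannot fetch the data it needs from kube-apiserver.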
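A minimal sketch of such RBAC objects, assuming a ServiceAccount named `ingress-controller` in the `ingress-controller` namespace. The names are hypothetical, and the rule list is a plausible minimum for an ingress controller rather than a copy of the project's rbac.yaml, so compare it with the file in the examples directory before relying on it.

```yaml
# Hypothetical RBAC sketch for an ingress controller; names and rules are assumptions.
apiVersion: v1
kind: ServiceAccount
metadata:
  name: ingress-controller
  namespace: ingress-controller
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: ingress-controller
rules:
- apiGroups: [""]
  resources: ["configmaps", "endpoints", "nodes", "pods", "secrets", "services"]
  verbs: ["get", "list", "watch"]        # read backends and TLS secrets
- apiGroups: ["extensions"]
  resources: ["ingresses"]
  verbs: ["get", "list", "watch"]        # watch Ingress rules
- apiGroups: ["extensions"]
  resources: ["ingresses/status"]
  verbs: ["update"]                      # publish the controller address
- apiGroups: [""]
  resources: ["events"]
  verbs: ["create", "patch"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: ingress-controller
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: ingress-controller
subjects:
- kind: ServiceAccount
  name: ingress-controller
  namespace: ingress-controller
```

The controller pod would then set `serviceAccountName: ingress-controller` so that its API calls use these permissions.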

cat rbac.yaml

apiVersion: v1

cat default-backend.yaml

apiVersion: extensions/v1beta1

cat haproxy-ingress.yaml

apiVersion: extensions/v1beta1

Apply

kubectl apply -f rbac.yaml

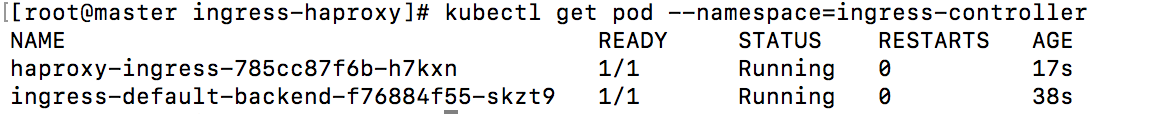

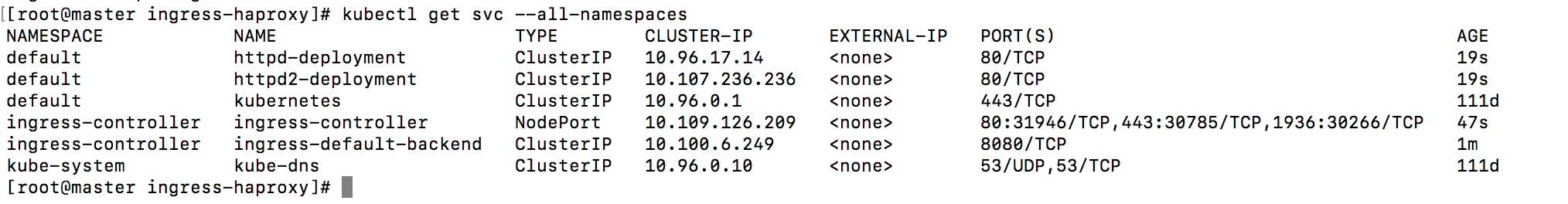

Check the pods

kubectl get pod --namespace=ingress-controller

Create a test application, again using the httpd-app from above

kubectl apply -f httpd-app.yaml

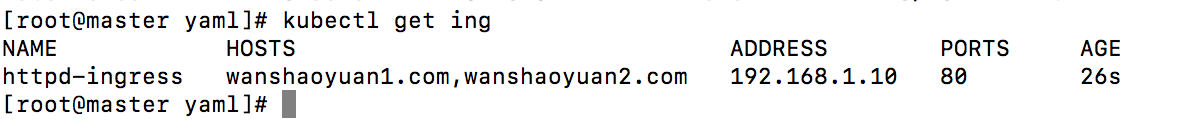

kubectl get ing

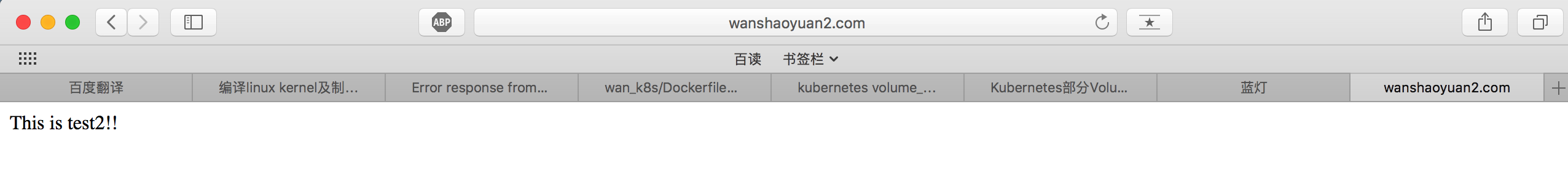

Configure hosts and access the service

Test

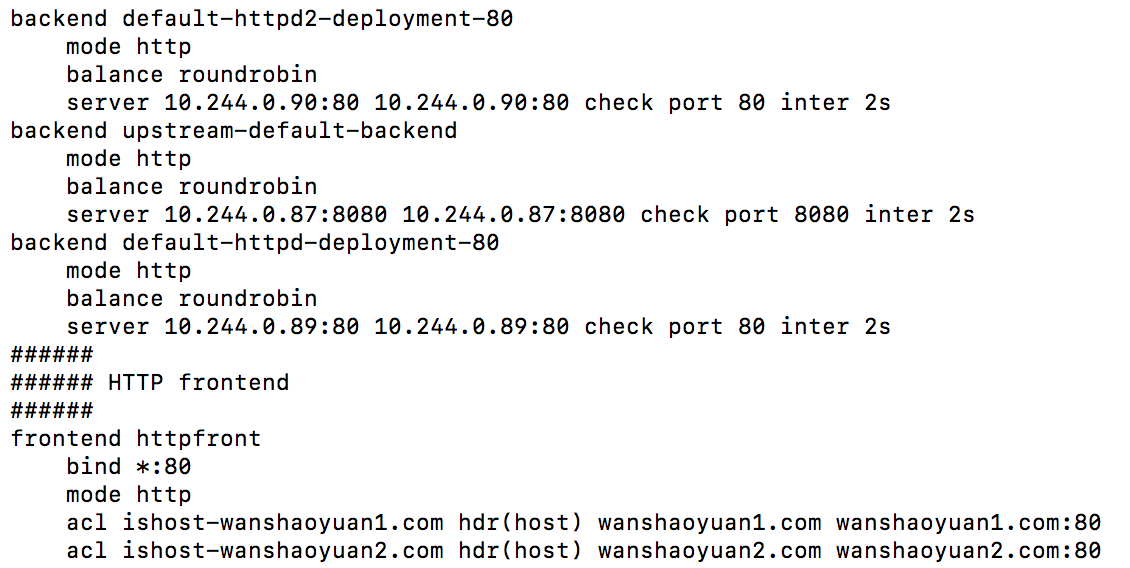

View the haproxy configuration file generated by the ingress controller

kubectl -n ingress-controller exec -it haproxy-ingress-785cc87f6b-h7kxn -- cat /etc/haproxy/haproxy.cfg

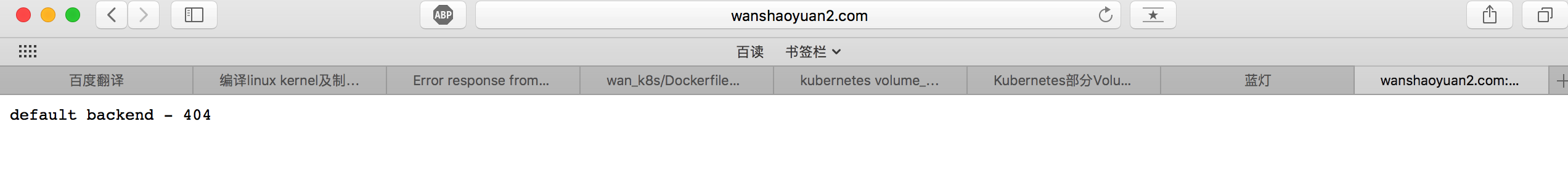

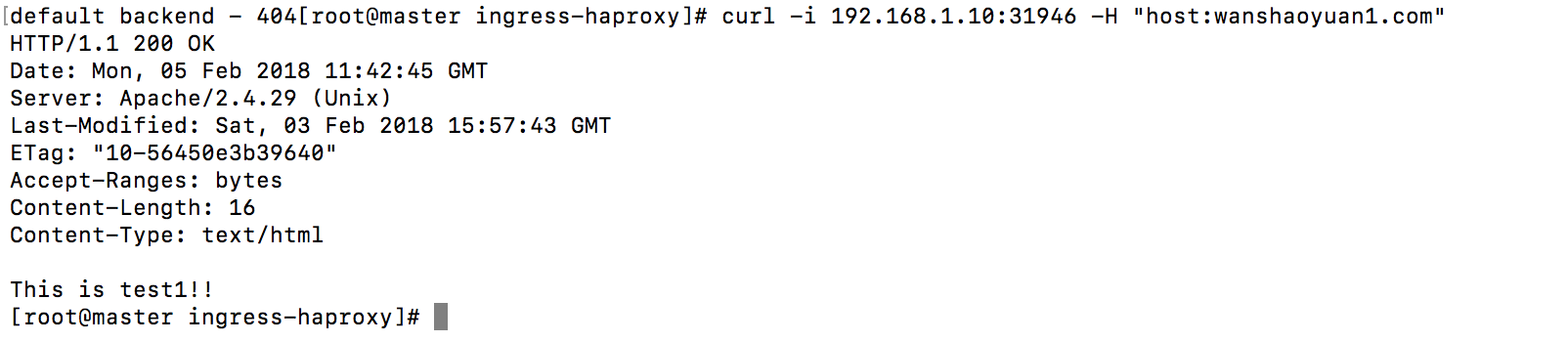

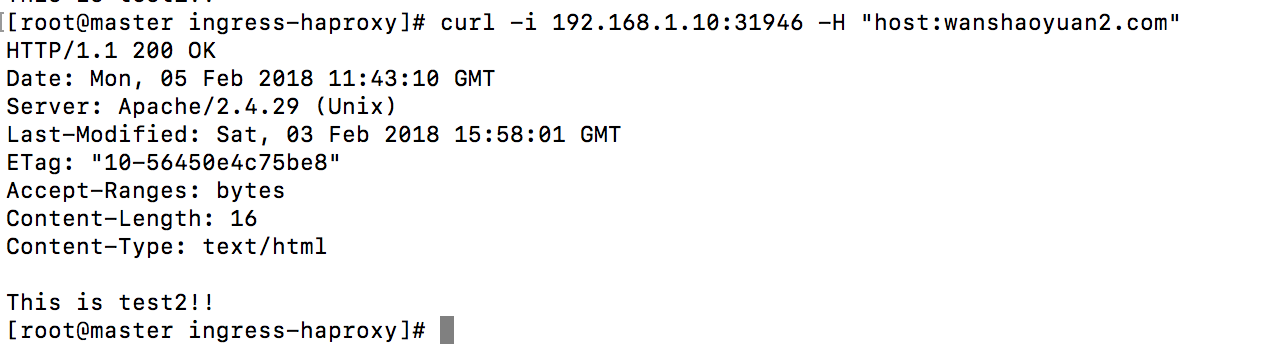

My haproxy ingress controller uses hostNetwork mode. When I don't use hostNetwork and instead expose the controller through a NodePort Service, the following happens.

However, when curl sets the Host header of the HTTP request explicitly:

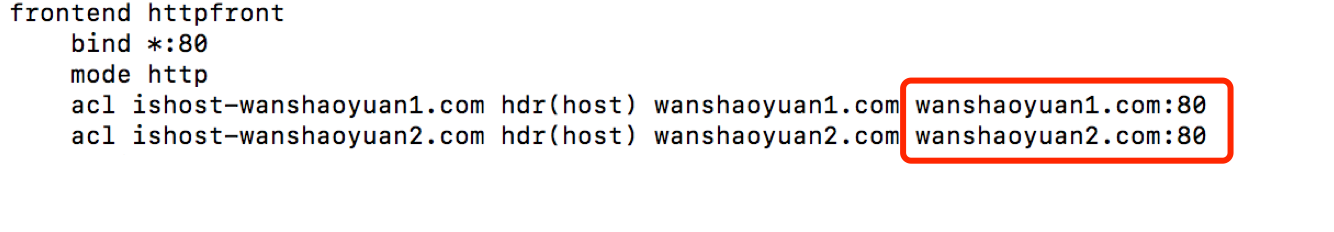

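Judging from the haproxy configuration file, an ACL in haproxy.cfg restricts the Host header field of the request.

This setup still has a problem: the ingress controller is itself deployed as a pod, and in practice pods can move to other nodes, which changes the IP. In an enterprise every permitted IP has to be opened on the firewall, so we need a VIP, using haproxy + keepalived for high availability. The exact steps are not covered here.

Deploying a Traefik ingress

Traefik is an open-source reverse proxy and load balancer built for microservices. It continuously detects changes to backend services, updates its configuration automatically, and hot-reloads it without pausing or stopping the service.

Why choose Traefik (http://blog.csdn.net/aixiaoyang168/article/details/78557739)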

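- Fast

- No extra dependencies: a single executable compiled from Go

- Small official Docker image

- Supports multiple backends, such as Docker, Swarm mode, Kubernetes, Marathon, Consul, Etcd, Rancher, Amazon ECS, and more

- Supports a REST API

- Hot-reloads its configuration without restarting the process

- Automatic circuit breaking

- Round-robin load balancing

- Clean web UI

- Supports WebSocket, HTTP/2, and gRPC

- Automatically renews HTTPS certificates

- Supports a high-availability cluster mode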

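How does it differ from nginx-ingress and haproxy-ingress?

1. nginx-ingress and haproxy-ingress need an ingress controller that interacts with kube-apiserver, reads changes to backend Services, pods and so on, and then dynamically refreshes the nginx (or haproxy) configuration to achieve service discovery. Traefik is designed to talk to kube-apiserver directly: it detects changes to backend Services and pods itself, updates its configuration automatically, and hot-reloads it.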

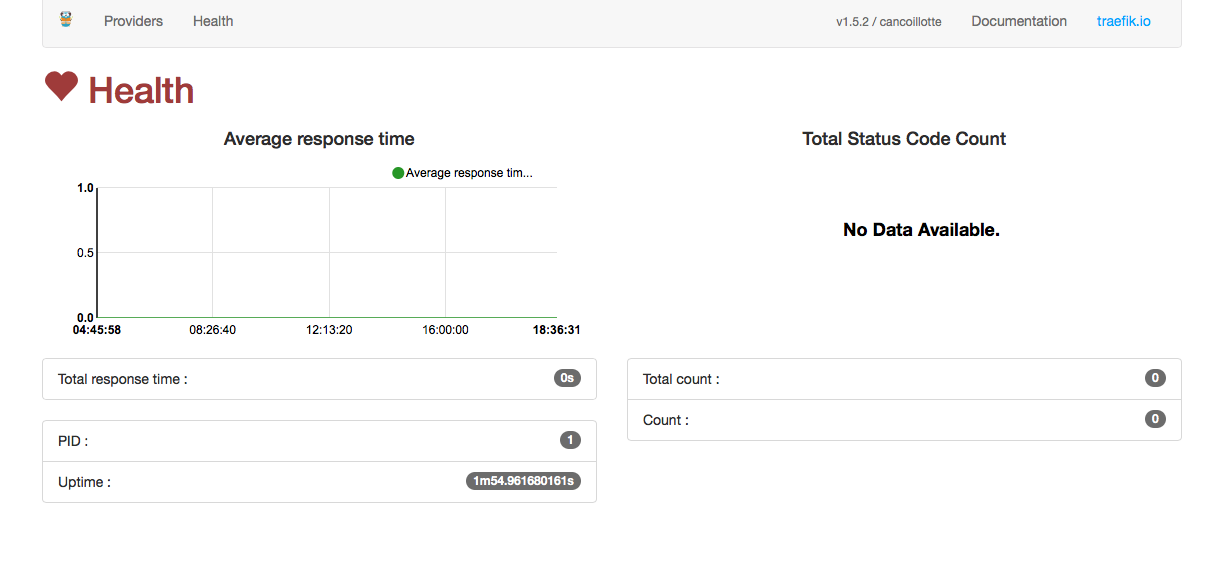

2. Traefik is fast and convenient, and comes with a built-in web UI and status monitoring.

Deploying Traefik

git clone https://github.com/containous/traefik.git

The traefik/examples/k8s/ directory contains the YAML files needed for a Kubernetes cluster.

traefik-rbac.yaml creates the ClusterRole for Traefik and binds it to the ServiceAccount.

Run

kubectl apply -f traefik-rbac.yaml

traefik-deployment.yaml creates the ServiceAccount, the pod, and the Service.

Run

kubectl apply -f traefik-deployment.yaml

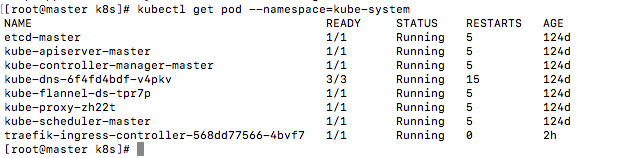

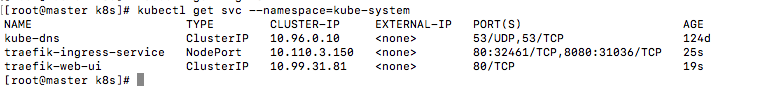

Check

It listens on ports 80 and 8080: port 80 serves application traffic and port 8080 serves the UI.

Access

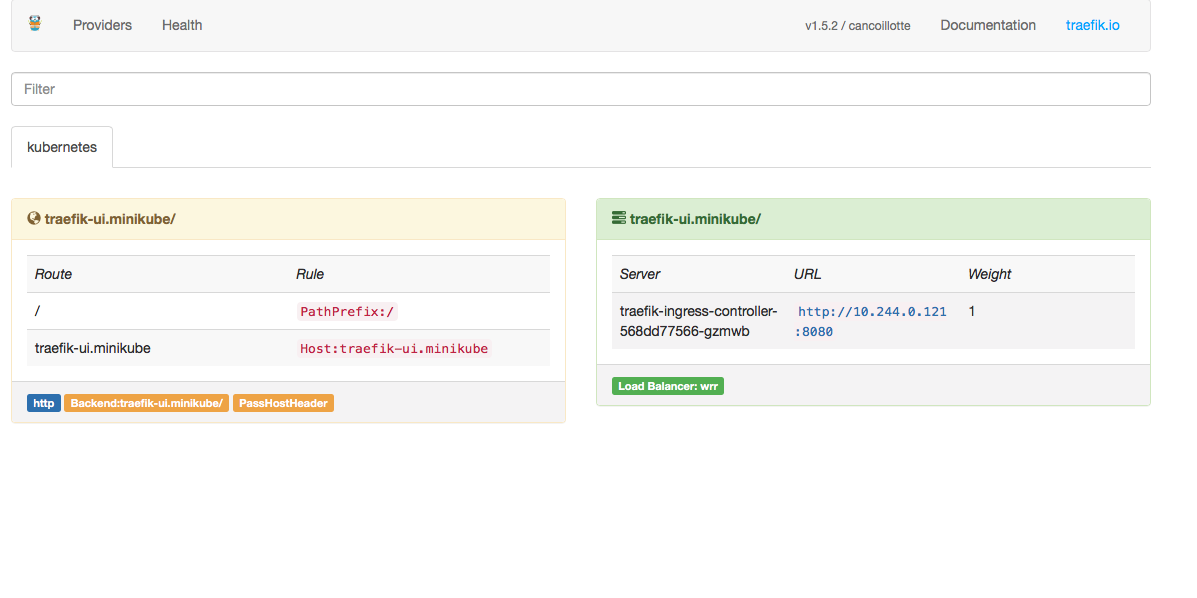

Deploying the UI

The UI is exposed through an Ingress.
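A sketch of a Service and Ingress for the UI. It assumes Traefik runs in the `kube-system` namespace with the pod label `k8s-app: traefik-ingress-lb` and serves its UI on port 8080; verify the label and namespace against traefik-deployment.yaml. The Service name and the host `traefik-ui.example.com` are hypothetical.

```yaml
# Hypothetical UI exposure for Traefik; label, namespace and host are assumptions.
apiVersion: v1
kind: Service
metadata:
  name: traefik-web-ui
  namespace: kube-system
spec:
  selector:
    k8s-app: traefik-ingress-lb   # assumed label of the Traefik pods
  ports:
  - name: web
    port: 80
    targetPort: 8080              # Traefik UI port
---
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: traefik-web-ui
  namespace: kube-system
spec:
  rules:
  - host: traefik-ui.example.com  # hypothetical host; add it to the client's hosts file to test
    http:
      paths:
      - path: /
        backend:
          serviceName: traefik-web-ui
          servicePort: web        # refers to the named Service port above
```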

Traefik quickly discovers the Ingress we just added.

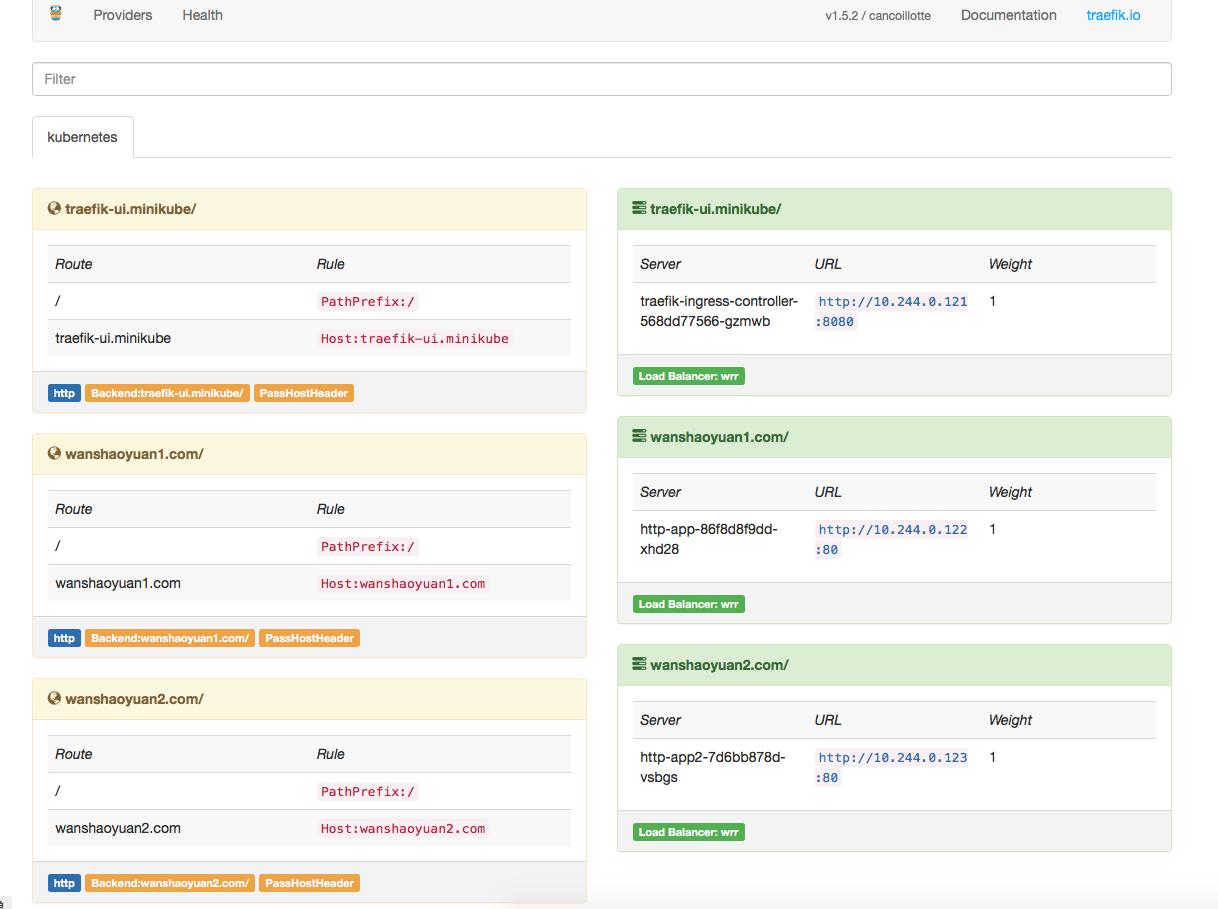

Test with the same test case used for ingress-nginx and ingress-haproxy above

Create the Deployments

kubectl apply -f httpd-app.yaml

Create the Services

kubectl apply -f httpd_service.yaml

Create the Ingress

kubectl apply -f httpd-ingress.yaml
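With nginx-ingress, haproxy-ingress and Traefik all running in one cluster, several controllers may act on the same Ingress. The `kubernetes.io/ingress.class` annotation is the usual way to claim an Ingress for one controller; the sketch below targets Traefik. The Ingress name and the backend Service `httpd` are hypothetical, and whether a controller ignores Ingresses without this annotation depends on how it is configured.

```yaml
# Hypothetical Ingress claimed by the Traefik controller via the ingress.class annotation.
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: httpd-ingress-traefik
  annotations:
    kubernetes.io/ingress.class: traefik   # controllers with a different class should skip it
spec:
  rules:
  - host: wanshaoyuan1.com
    http:
      paths:
      - path: /
        backend:
          serviceName: httpd               # assumed Service name
          servicePort: 80
```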

Test access

References:

http://dockone.io/article/1418

http://blog.csdn.net/aixiaoyang168/article/details/78557739

http://www.mamicode.com/info-detail-2109270.html