Deploying Elasticsearch to Kubernetes with ECK

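Overview

Operators make it quick to deploy stateful applications. With the ECK operator, an Elasticsearch cluster can be deployed onto a Kubernetes cluster in a few steps, and the same operator can then manage and scale that cluster.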

Environment

| Software | Version |
| --- | --- |
| Rancher | 2.3.8-ent |
| Kubernetes | 1.17.6 |
| OS | CentOS 7.6 |
| Elasticsearch | 7.9 |
| ECK Operator | 1.2 |

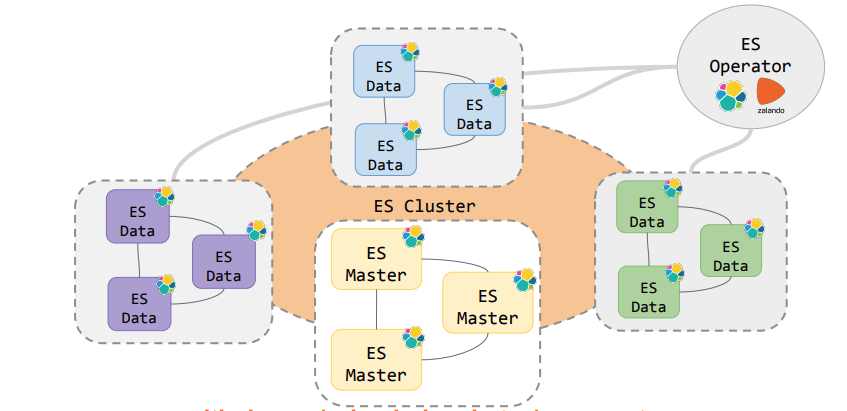

Architecture

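Detailed deployment

Tune the host kernel parameters

`vm.max_map_count` limits the number of VMAs (virtual memory areas) a process may own. Elasticsearch requires at least 262144; otherwise it fails with `max virtual memory areas vm.max_map_count [65535] is too low, increase to at least [262144]`. Set it on every host that may run Elasticsearch pods:

```shell
echo "vm.max_map_count=655360" >> /etc/sysctl.conf
```

Apply the change without rebooting by running `sysctl -p`.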
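To confirm the setting on each host, a small check like the following can help. It is a sketch that assumes a Linux host exposing `/proc/sys/vm/max_map_count`; the function names are illustrative and not part of any tool.

```shell
#!/usr/bin/env bash
# Minimum vm.max_map_count required by Elasticsearch (taken from the error message above).
ES_MIN_MAP_COUNT=262144

# map_count_ok VALUE: succeed if VALUE meets the Elasticsearch minimum.
map_count_ok() {
  [ "$1" -ge "$ES_MIN_MAP_COUNT" ]
}

# check_host_map_count: read the live kernel value and report whether it is sufficient.
check_host_map_count() {
  local current
  current=$(cat /proc/sys/vm/max_map_count) || return 1
  if map_count_ok "$current"; then
    echo "vm.max_map_count=$current (ok)"
  else
    echo "vm.max_map_count=$current is below $ES_MIN_MAP_COUNT" >&2
    return 1
  fi
}
```

After applying the setting, run `check_host_map_count` on each host; with the value above it should report `vm.max_map_count=655360 (ok)`.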

Deploy Local PV

Use Rancher's open-source local-path-provisioner to store Elasticsearch data on each node's local disk:

https://github.com/rancher/local-path-provisioner

https://www.bladewan.com/2018/05/19/kubernetes_storage/
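The local-path-provisioner README documents a one-line install. The sketch below applies that manifest and then checks for the `local-path` StorageClass that the Elasticsearch manifest later references through `storageClassName: local-path`. The URL tracks the project's master branch (pin a release tag from the README for reproducible installs), and the function name is illustrative.

```shell
#!/usr/bin/env bash
# Install manifest from the local-path-provisioner README (master branch; consider pinning a tag).
LOCAL_PATH_MANIFEST="https://raw.githubusercontent.com/rancher/local-path-provisioner/master/deploy/local-path-storage.yaml"

# install_local_path: deploy the provisioner, then confirm the "local-path" StorageClass exists.
install_local_path() {
  kubectl apply -f "$LOCAL_PATH_MANIFEST" || return 1
  # The Elasticsearch volumeClaimTemplates below use storageClassName: local-path.
  kubectl get storageclass local-path
}
```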

Deploy the ECK operator

```shell
kubectl apply -f https://download.elastic.co/downloads/eck/1.2.1/all-in-one.yaml
```

Check the operator status:

```shell
kubectl -n elastic-system logs -f statefulset.apps/elastic-operator
```

```
kubectl get pod/elastic-operator-0 -n elastic-system
NAME                 READY   STATUS    RESTARTS   AGE
elastic-operator-0   1/1     Running   0          2d23h
```
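In scripts it is handier to block until the operator is ready than to poll `kubectl get pod` by hand. A minimal sketch using `kubectl wait` (available in kubectl 1.17); the function name and default timeout are illustrative.

```shell
#!/usr/bin/env bash
# wait_for_operator [TIMEOUT]: block until the ECK operator pod reports the Ready condition.
wait_for_operator() {
  local timeout="${1:-300s}"
  kubectl -n elastic-system wait --for=condition=Ready \
    pod/elastic-operator-0 --timeout="$timeout"
}
```

Run `wait_for_operator` right after applying `all-in-one.yaml`; it returns non-zero if the pod is not Ready within the timeout.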

Deploy Elasticsearch

```shell
cat <<EOF | kubectl apply -f -
apiVersion: elasticsearch.k8s.elastic.co/v1
kind: Elasticsearch
metadata:
  name: quickstart
spec:
  version: 7.9.0
  nodeSets:
  - name: master-nodes
    count: 3
    podTemplate:
      spec:
        containers:
        - name: elasticsearch
          env:
          - name: ES_JAVA_OPTS
            value: -Xms2g -Xmx2g
          resources:
            requests:
              memory: 4Gi
              cpu: 0.5
            limits:
              memory: 4Gi
              cpu: 2
          image: docker.elastic.co/elasticsearch/elasticsearch:7.9.0
    config:
      node.master: true
      node.data: false
      node.ingest: false
    volumeClaimTemplates:
    - metadata:
        name: elasticsearch-data
      spec:
        accessModes:
        - ReadWriteOnce
        resources:
          requests:
            storage: 10Gi
        storageClassName: local-path
  - name: data-nodes
    count: 3
    podTemplate:
      spec:
        containers:
        - name: elasticsearch
          env:
          - name: ES_JAVA_OPTS
            value: -Xms2g -Xmx2g
          resources:
            requests:
              memory: 4Gi
              cpu: 0.5
            limits:
              memory: 4Gi
              cpu: 2
          image: docker.elastic.co/elasticsearch/elasticsearch:7.9.0
    config:
      node.master: false
      node.data: true
      node.ingest: false
    volumeClaimTemplates:
    - metadata:
        name: elasticsearch-data
      spec:
        accessModes:
        - ReadWriteOnce
        resources:
          requests:
            storage: 10Gi
        storageClassName: local-path
EOF
```
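The manifest pairs a 2 GB JVM heap with a 4 Gi memory limit, in line with Elastic's guidance to give the heap no more than half of the available memory and leave the rest to the OS filesystem cache. If you change the limits, a hypothetical helper like this keeps `ES_JAVA_OPTS` consistent. It is a sketch that assumes whole-GiB limits and simply halves them with integer division.

```shell
#!/usr/bin/env bash
# heap_opts LIMIT_GI: print ES_JAVA_OPTS with Xms = Xmx = half of a memory limit given in GiB.
# Hypothetical helper: whole-GiB limits only; odd values round down.
heap_opts() {
  local limit_gi="$1"
  local heap=$(( limit_gi / 2 ))
  if [ "$heap" -lt 1 ]; then
    echo "memory limit too small: ${limit_gi}Gi" >&2
    return 1
  fi
  printf '%s\n' "-Xms${heap}g -Xmx${heap}g"
}
```

`heap_opts 4` prints `-Xms2g -Xmx2g`, which matches the values used in both node sets above.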

Notes:

Check the deployment status:

```
kubectl get elasticsearch
NAME         HEALTH   NODES   VERSION   PHASE   AGE
quickstart   green    6       7.9.0     Ready   41m
```
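Instead of re-running `kubectl get elasticsearch` until `HEALTH` turns green, a polling helper can wait for it. This sketch reads `.status.health`, which ECK fills in for both the Elasticsearch and Kibana resources. A loop is used because `kubectl wait --for=jsonpath` does not exist in kubectl 1.17. The function name, retry count, and interval are illustrative.

```shell
#!/usr/bin/env bash
# wait_green KIND NAME [TRIES] [INTERVAL]: poll .status.health of an ECK resource until it is "green".
wait_green() {
  local kind="$1" name="$2" tries="${3:-60}" interval="${4:-10}" health i
  for (( i = 0; i < tries; i++ )); do
    health=$(kubectl get "$kind" "$name" -o jsonpath='{.status.health}')
    if [ "$health" = "green" ]; then
      echo "$kind/$name is green"
      return 0
    fi
    sleep "$interval"
  done
  echo "$kind/$name not green after $tries checks (last: ${health:-unknown})" >&2
  return 1
}
```

For example, `wait_green elasticsearch quickstart` here, and `wait_green kibana quickstart` after deploying Kibana.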

Check the pods. Three master nodes and three data nodes have been deployed:

```
kubectl get pod
NAME                           READY   STATUS    RESTARTS   AGE
quickstart-es-data-nodes-0     1/1     Running   0          10m
quickstart-es-data-nodes-1     1/1     Running   0          12m
quickstart-es-data-nodes-2     1/1     Running   0          14m
quickstart-es-master-nodes-0   1/1     Running   0          5m57s
quickstart-es-master-nodes-1   1/1     Running   0          7m23s
quickstart-es-master-nodes-2   1/1     Running   0          8m36s
```
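`kubectl get pod` also lists unrelated pods in the namespace. ECK labels each Elasticsearch pod with `elasticsearch.k8s.elastic.co/cluster-name`, so you can narrow the listing to one cluster and count the pods that are Running. In this sketch the function name is illustrative, and it expects the default `kubectl get pods` column layout, where the third column is `STATUS`.

```shell
#!/usr/bin/env bash
# count_es_pods [CLUSTER]: count Running pods that ECK labelled as members of CLUSTER.
count_es_pods() {
  kubectl get pods -l "elasticsearch.k8s.elastic.co/cluster-name=${1:-quickstart}" \
    --no-headers | awk '$3 == "Running" { n++ } END { print n + 0 }'
}
```

For the cluster above, `count_es_pods quickstart` should print `6`.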

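X-Pack security is enabled by default, so clients must authenticate. The default user is `elastic`, and its password is stored in a Secret.

Retrieve the password:

```shell
PASSWORD=$(kubectl get secret quickstart-es-elastic-user -o go-template='{{.data.elastic | base64decode}}')
```

Expose the HTTP service externally as a NodePort:

```shell
kubectl patch svc quickstart-es-http -p '{"spec": {"type": "NodePort"}}'
```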
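The curl commands below need a node IP and the port that Kubernetes assigned to the NodePort service. A sketch that looks both up, assuming the first node's `InternalIP` is reachable from where you run curl (a NodePort listens on every node). The function name is illustrative, and it works for the Kibana service `quickstart-kb-http` as well.

```shell
#!/usr/bin/env bash
# es_endpoint [SERVICE]: print https://<node-ip>:<node-port> for a NodePort service.
es_endpoint() {
  local svc="${1:-quickstart-es-http}" ip port
  port=$(kubectl get svc "$svc" -o jsonpath='{.spec.ports[0].nodePort}') || return 1
  ip=$(kubectl get nodes -o jsonpath='{.items[0].status.addresses[?(@.type=="InternalIP")].address}') || return 1
  printf 'https://%s:%s\n' "$ip" "$port"
}
```

Usage: `ES_URL=$(es_endpoint)`, then `curl -k -u "elastic:$PASSWORD" "$ES_URL/_cat/health?v"`.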

Access Elasticsearch

Check the cluster health (`-k` skips verification of the self-signed certificate):

```
curl -k -u "elastic:$PASSWORD" "https://node_ip:NodePort/_cat/health?v"
epoch      timestamp cluster    status node.total node.data shards pri relo init unassign pending_tasks max_task_wait_time active_shards_percent
1599476283 10:58:03  quickstart green           6         3      0   0    0    0        0             0                  -                 100.0%
```
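For scripts, the `h` parameter of the `_cat` APIs selects columns, so you can fetch only the `status` value rather than parsing the whole table. A sketch that assumes `$PASSWORD` holds the `elastic` password retrieved above and takes the base URL (for example `https://node_ip:NodePort`) as its argument. The function name is illustrative.

```shell
#!/usr/bin/env bash
# es_status URL: print only the cluster status (green/yellow/red) from _cat/health.
# Expects $PASSWORD to hold the elastic user's password.
es_status() {
  curl -sk -u "elastic:${PASSWORD}" "$1/_cat/health?h=status" | tr -d '[:space:]'
  echo
}
```

For the cluster above, this prints `green`.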

List the cluster nodes:

```
curl -k -u "elastic:$PASSWORD" "https://172.16.1.6:30620/_cat/nodes?v"
ip          heap.percent ram.percent cpu load_1m load_5m load_15m node.role master name
10.42.1.92            27          58  16    2.97    2.15     1.66 dlrt      -      quickstart-es-data-nodes-1
10.42.4.15             8          58  15    2.00    1.03     0.95 lmr       -      quickstart-es-master-nodes-0
10.42.0.41             5          58  15    1.88    2.82     2.92 dlrt      -      quickstart-es-data-nodes-0
10.42.2.183           35          58   6    0.12    0.09     0.09 lmr       *      quickstart-es-master-nodes-2
10.42.5.8              8          58  12    0.85    0.45     0.34 dlrt      -      quickstart-es-data-nodes-2
10.42.3.20            56          58   8    0.05    0.18     0.38 lmr       -      quickstart-es-master-nodes-1
```

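Role legend:

d (data node)

l (machine learning node)

m (master-eligible node)

r (remote cluster client node)

t (transform node)

An `*` in the `master` column marks the node that the three master-eligible nodes elected as master (the leader).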
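The same checks can be scripted: request only the `node.role`, `master`, and `name` columns, count the role strings that contain `m`, and pick out the row marked `*`. A sketch that assumes `$PASSWORD` is set as above. The function name and output format are illustrative.

```shell
#!/usr/bin/env bash
# es_masters URL: count master-eligible nodes and name the elected master from _cat/nodes.
# Expects $PASSWORD to hold the elastic user's password.
es_masters() {
  curl -sk -u "elastic:${PASSWORD}" "$1/_cat/nodes?h=node.role,master,name" |
    awk '
      $1 ~ /m/  { eligible++ }   # role letters include "m": master-eligible
      $2 == "*" { elected = $3 } # "*" marks the elected master
      END { printf "master-eligible=%d elected=%s\n", eligible, elected }'
}
```

For the output above, this would print `master-eligible=3 elected=quickstart-es-master-nodes-2`.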

Deploy Kibana

```shell
cat <<EOF | kubectl apply -f -
apiVersion: kibana.k8s.elastic.co/v1
kind: Kibana
metadata:
  name: quickstart
spec:
  version: 7.9.1
  count: 1
  elasticsearchRef:
    name: quickstart
  podTemplate:
    spec:
      containers:
      - name: kibana
        resources:
          requests:
            memory: 1Gi
            cpu: 0.5
          limits:
            memory: 2Gi
            cpu: 2
        image: docker.elastic.co/kibana/kibana:7.9.1
EOF
```

Check the Kibana deployment status:

```
kubectl get kibana
NAME         HEALTH   NODES   VERSION   AGE
quickstart   green            7.9.1     6m21s
```

Expose it as a NodePort:

```shell
kubectl patch svc quickstart-kb-http -p '{"spec": {"type": "NodePort"}}'
```

Retrieve the password. By default, the Kibana login uses the same `elastic` user, whose password is stored in the Secret:

```shell
kubectl get secret quickstart-es-elastic-user -o=jsonpath='{.data.elastic}' | base64 --decode; echo
```

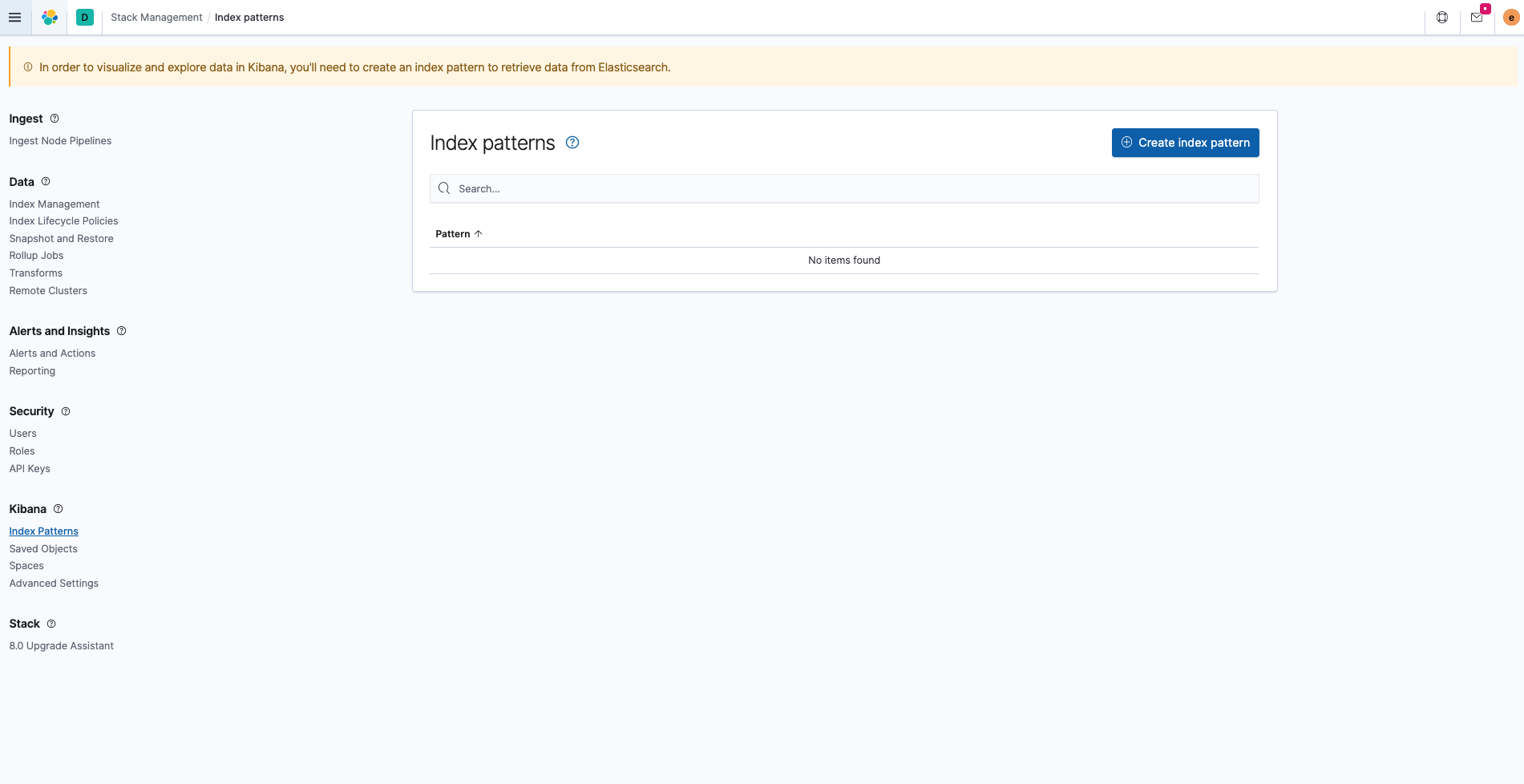

Open Kibana at https://host_ip:nodeport.

Log in as `elastic`, using the password you just retrieved from the Secret.

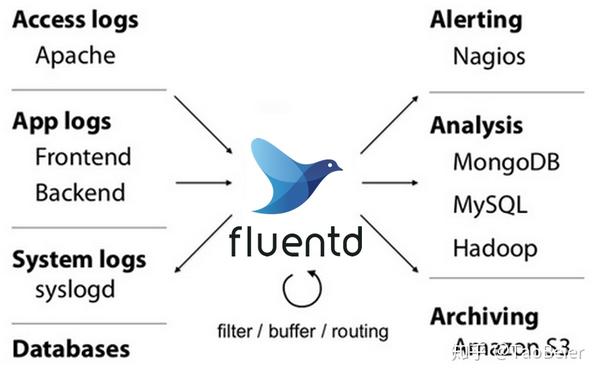

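Deploy Fluentd

Pros:

1. A rich plugin ecosystem: community plugins cover most needs, so you do not have to reinvent the wheel.

2. Custom plugins are easy to write in Ruby.

Cons:

Save the following manifest (for example as `fluentd.yaml`) and apply it with `kubectl apply -f fluentd.yaml`: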

```yaml
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: fluentd
  namespace: kube-system
---
# ClusterRole is cluster-scoped, so it takes no namespace.
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: fluentd
rules:
- apiGroups:
  - ""
  resources:
  - pods
  - namespaces
  verbs:
  - get
  - list
  - watch
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: fluentd
roleRef:
  kind: ClusterRole
  name: fluentd
  apiGroup: rbac.authorization.k8s.io
subjects:
- kind: ServiceAccount
  name: fluentd
  namespace: kube-system
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: fluentd
  namespace: kube-system
  labels:
    k8s-app: fluentd-logging
    version: v1
spec:
  selector:
    matchLabels:
      k8s-app: fluentd-logging
      version: v1
  template:
    metadata:
      labels:
        k8s-app: fluentd-logging
        version: v1
    spec:
      serviceAccount: fluentd
      serviceAccountName: fluentd
      tolerations:
      - key: node-role.kubernetes.io/master
        effect: NoSchedule
      containers:
      - name: fluentd
        image: fluent/fluentd-kubernetes-daemonset:v1-debian-elasticsearch
        env:
          - name: FLUENT_ELASTICSEARCH_HOST
            value: "10.43.7.26"
          - name: FLUENT_ELASTICSEARCH_PORT
            value: "9200"
          - name: FLUENT_ELASTICSEARCH_SCHEME
            value: "https"
          # Option to configure elasticsearch plugin with self signed certs
          # ================================================================
          - name: FLUENT_ELASTICSEARCH_SSL_VERIFY
            value: "false"
          # Option to configure elasticsearch plugin with tls
          # ================================================================
          - name: FLUENT_ELASTICSEARCH_SSL_VERSION
            value: "TLSv1_2"
          # X-Pack Authentication
          # =====================
          - name: FLUENT_ELASTICSEARCH_USER
            value: "elastic"
          - name: FLUENT_ELASTICSEARCH_PASSWORD
            value: "g38SlQ408i5SI6E24SoqdE3N"
        resources:
          limits:
            memory: 200Mi
          requests:
            cpu: 100m
            memory: 200Mi
        volumeMounts:
        - name: varlog
          mountPath: /var/log
        - name: varlibdockercontainers
          mountPath: /var/lib/docker/containers
          readOnly: true
      terminationGracePeriodSeconds: 30
      volumes:
      - name: varlog
        hostPath:
          path: /var/log
      - name: varlibdockercontainers
        hostPath:
          path: /var/lib/docker/containers
```

Change the following environment variables to match your actual Elasticsearch address and credentials:

```
FLUENT_ELASTICSEARCH_HOST        # the actual Elasticsearch address
FLUENT_ELASTICSEARCH_PORT        # the actual Elasticsearch port
FLUENT_ELASTICSEARCH_SSL_VERIFY  # disabled because the cluster uses a self-signed certificate
FLUENT_ELASTICSEARCH_USER        # X-Pack user to connect as
FLUENT_ELASTICSEARCH_PASSWORD    # password for that X-Pack user
```
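Instead of hard-coding a ClusterIP and password, you could point the DaemonSet at ECK's HTTP service by its DNS name and copy the password from the ECK Secret. The sketch below uses `kubectl set env`, which rewrites the named variables and triggers a rollout. It assumes the `quickstart` cluster lives in the `default` namespace, so that its service resolves as `quickstart-es-http.default.svc`. The function name is illustrative. Note that the password still ends up as a plain env value on the DaemonSet.

```shell
#!/usr/bin/env bash
# configure_fluentd: point the Fluentd DaemonSet at the ECK cluster "quickstart".
# Assumption: the Elasticsearch resource and its Secret live in the "default" namespace.
configure_fluentd() {
  local password
  password=$(kubectl -n default get secret quickstart-es-elastic-user \
    -o go-template='{{.data.elastic | base64decode}}') || return 1
  kubectl -n kube-system set env daemonset/fluentd \
    FLUENT_ELASTICSEARCH_HOST=quickstart-es-http.default.svc \
    FLUENT_ELASTICSEARCH_PORT=9200 \
    FLUENT_ELASTICSEARCH_PASSWORD="$password"
}
```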

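Fluentd runs as a DaemonSet, and each pod mounts the host's `/var/log` directory. The Fluentd configuration file `/fluentd/etc/kubernetes.conf` configures the `tail` input plugin to collect the `*.log` files in `/var/log/containers/`. These files are symlinks to the files that hold each container's standard output. With the Docker runtime they ultimately resolve into `/var/lib/docker/containers`, which is why that directory is also mounted read-only.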
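To see what the `tail` plugin actually reads on a node, you can resolve those symlinks. A sketch that assumes GNU `readlink -f` (standard on CentOS). The function name is illustrative.

```shell
#!/usr/bin/env bash
# show_log_targets [DIR]: print each *.log symlink in DIR and the file it finally resolves to.
show_log_targets() {
  local dir="${1:-/var/log/containers}" f
  for f in "$dir"/*.log; do
    [ -L "$f" ] || continue   # skip non-symlinks (and the unmatched glob itself)
    printf '%s -> %s\n' "$(basename "$f")" "$(readlink -f "$f")"
  done
}
```

On a node using the Docker runtime, the targets should end in `-json.log` files under `/var/lib/docker/containers`.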

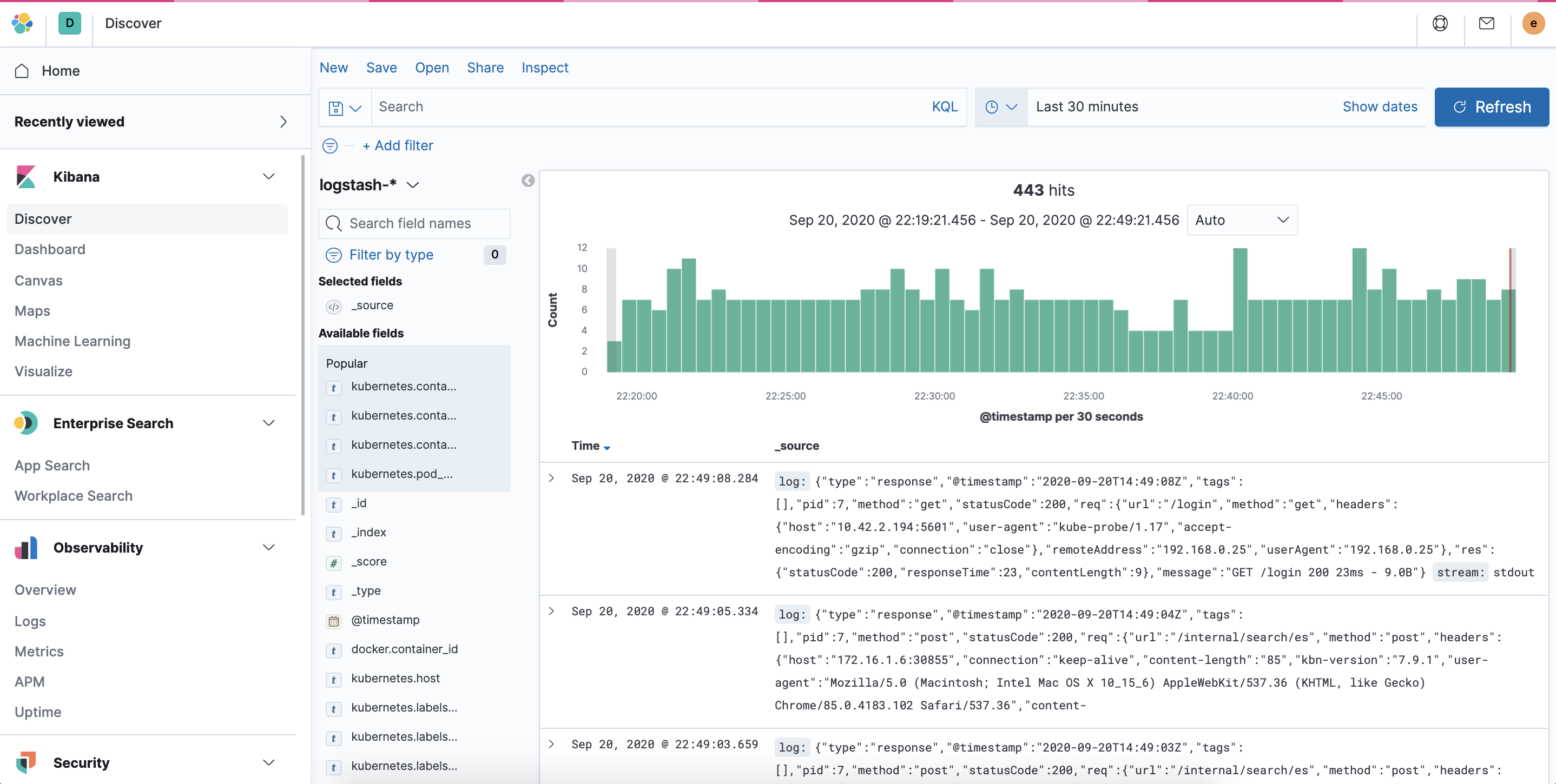

In Kibana, create an index pattern that matches the indices Fluentd writes, then browse the logs.
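Before creating the index pattern, it helps to confirm that Fluentd is actually writing indices. This sketch assumes the `fluentd-kubernetes-daemonset` image defaults: `logstash_format` is enabled with the `logstash` prefix, which produces daily indices named `logstash-YYYY.MM.DD`. If that holds, `logstash-*` is the pattern to use in Kibana. It also assumes `$PASSWORD` is set as earlier. The function name is illustrative.

```shell
#!/usr/bin/env bash
# fluentd_indices URL: list indices matching the default Fluentd prefix, sorted by name.
# Assumes the image's default "logstash" index prefix; expects $PASSWORD to be set.
fluentd_indices() {
  curl -sk -u "elastic:${PASSWORD}" "$1/_cat/indices/logstash-*?h=index&s=index"
}
```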