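Overview

Calico is best known for its BGP mode. Testing cross-subnet route synchronization in Calico BGP mode normally requires the router that joins the two subnets to support BGP, which makes the test environment considerably harder to build. This document uses the Bird software to turn a virtual machine into a software router and configures it as the BGP peer of the Kubernetes nodes, so that BGP routes are synchronized across the subnets.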

Software versions

| Software | Version |
|---|---|
| Kubernetes | v1.20.15 |
| calico | v3.17.2 |

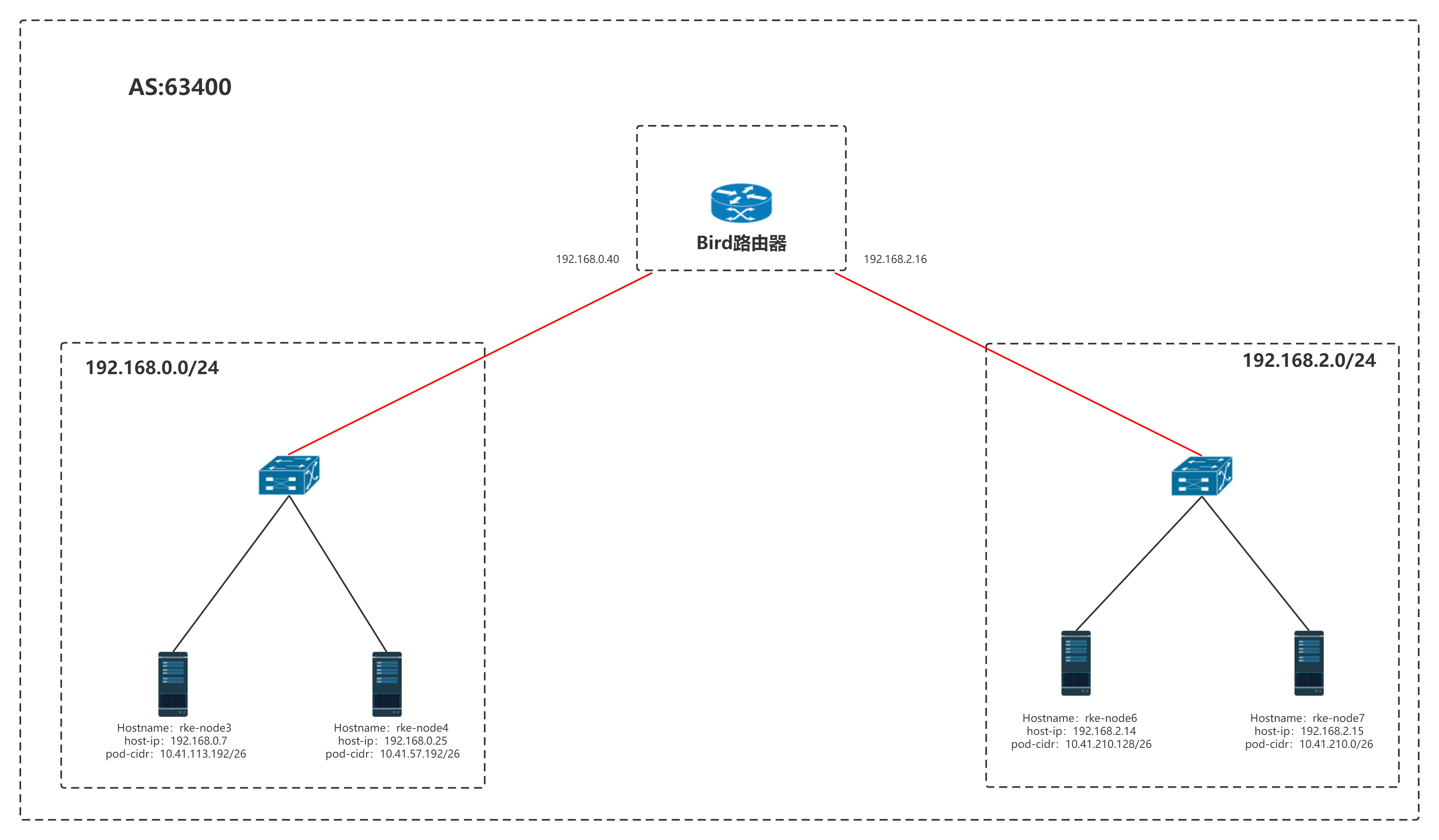

Topology diagram

| Hostname | Host IP | Pod CIDR |
|---|---|---|
| rke-node3 | 192.168.0.7 | 10.41.113.192/26 |
| rke-node4 | 192.168.0.25 | 10.41.57.192/26 |
| rke-node6 | 192.168.2.14 | 10.41.210.128/26 |
| rke-node7 | 192.168.2.15 | 10.41.210.0/26 |

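The Kubernetes nodes are spread across two subnets. A single VM is attached to both subnets and runs bird as a software router to carry traffic between them. The nodes and the router all belong to the same autonomous system (AS).

Note: if the underlying platform is OpenStack, the security groups on the network ports must be disabled.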

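Bird deployment and configuration

Node configuration

The Bird node is a single VM running CentOS 7.6. For it to act as a software router, make sure the following features are enabled.

Kernel IP forwarding

sysctl -a|grep "net.ipv4.ip_forward = 1"

net.ipv4.ip_forward = 1

iptables packet forwarding

iptables -P FORWARD ACCEPT

The nodes that need to reach each other must have static routes to the other subnet.

For example, on the nodes in 192.168.0.0/24:

ip route add 192.168.2.0/24 via 192.168.0.40 dev ens3

And on the nodes in 192.168.2.0/24:

ip route add 192.168.0.0/24 via 192.168.2.16 dev ens3

Verify connectivity by pinging a host in 192.168.2.0/24 from a host in 192.168.0.0/24.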
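The static routes above follow one rule: each node reaches the other subnet through the bird VM's address on its own subnet. The following is a minimal Python sketch of that rule. The names `ROUTER_IPS` and `static_route_cmds` are illustrative assumptions, and the interface name defaults to the `ens3` used in the commands above.

```python
import ipaddress
from typing import List

# Addresses of the bird VM on each subnet, taken from the commands above.
ROUTER_IPS = {
    ipaddress.ip_network("192.168.0.0/24"): "192.168.0.40",
    ipaddress.ip_network("192.168.2.0/24"): "192.168.2.16",
}


def static_route_cmds(node_ip: str, dev: str = "ens3") -> List[str]:
    """Return the `ip route add` commands a node needs to reach the other subnet(s)."""
    addr = ipaddress.ip_address(node_ip)
    # Find the subnet this node lives in; its gateway is the bird VM's
    # address on that same subnet.
    local = next((net for net in ROUTER_IPS if addr in net), None)
    if local is None:
        raise ValueError(f"{node_ip} is not in a known subnet")
    gateway = ROUTER_IPS[local]
    return [
        f"ip route add {remote} via {gateway} dev {dev}"
        for remote in ROUTER_IPS
        if remote != local
    ]
```

Calling `static_route_cmds("192.168.0.7")` yields the command shown above for the 192.168.0.0/24 nodes. The sketch only builds the command strings and does not run them.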

Bird configuration

Bird configuration file

mkdir /bird/

router id 192.168.0.40;

filter calico_export_to_bgp_peers {

if ( net ~ 10.41.0.0/16 ) then {

accept;

}

if ( net ~ 10.42.0.0/16 ) then {

accept;

}

reject;

}

filter calico_kernel_programming {

if ( net ~ 10.41.0.0/16 ) then {

accept;

}

if ( net ~ 10.42.0.0/16 ) then {

accept;

}

accept;

}

# Configure synchronization between routing tables and kernel.

protocol kernel {

learn; # Learn all alien routes from the kernel

persist; # Don't remove routes on bird shutdown

scan time 2; # Scan kernel routing table every 2 seconds

import all;

export filter calico_kernel_programming; # Default is export none

graceful restart; # Turn on graceful restart to reduce potential flaps in

# routes when reloading BIRD configuration. With a full

# automatic mesh, there is no way to prevent BGP from

# flapping since multiple nodes update their BGP

# configuration at the same time, GR is not guaranteed to

# work correctly in this scenario.

merge paths on; # Allow export multipath routes (ECMP)

}

protocol device {

debug { states };

scan time 2; # Scan interfaces every 2 seconds

}

protocol direct {

debug { states };

interface -"cali*", -"kube-ipvs*", "*"; # Exclude cali* and kube-ipvs* but

# include everything else. In

# IPVS-mode, kube-proxy creates a

# kube-ipvs0 interface. We exclude

# kube-ipvs0 because this interface

# gets an address for every in use

# cluster IP. We use static routes

# for when we legitimately want to

# export cluster IPs.

}

# Template for all BGP clients

template bgp bgp_template {

debug { states };

description "Connection to BGP peer";

local as 63400;

multihop;

gateway recursive; # This should be the default, but just in case.

import all; # Import all routes, since we don't know what the upstream

# topology is and therefore have to trust the ToR/RR.

export filter calico_export_to_bgp_peers; # Only want to export routes for workloads.

source address 192.168.0.40; # The local address we use for the TCP connection

add paths on;

graceful restart; # See comment in kernel section about graceful restart.

connect delay time 2;

connect retry time 5;

error wait time 5,30;

}

protocol bgp Node_192_168_0_25 from bgp_template {

rr client;

neighbor 192.168.0.25 as 63400;

}

protocol bgp Node_192_168_0_7 from bgp_template {

rr client;

neighbor 192.168.0.7 as 63400;

}

protocol bgp Node_192_168_2_14 from bgp_template {

rr client;

neighbor 192.168.2.14 as 63400;

}

protocol bgp Node_192_168_2_15 from bgp_template {

rr client;

neighbor 192.168.2.15 as 63400;

}

Replace the router id, pod CIDRs, neighbor IPs, and AS number in the configuration file with the actual values for the nodes that need to establish BGP sessions.
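Because each neighbor stanza differs only in the node IP, the stanzas can be generated rather than edited by hand. The sketch below is one way to do that in Python. The function name `render_neighbors` and its parameters are hypothetical, and the output simply mirrors the `protocol bgp` layout used in the configuration above.

```python
def render_neighbors(node_ips, as_number, rr_client=True):
    """Render one `protocol bgp` stanza per node IP.

    The stanza name follows the Node_<ip with underscores> pattern used in
    the configuration above, and each stanza inherits from bgp_template.
    """
    stanzas = []
    for ip in node_ips:
        name = "Node_" + ip.replace(".", "_")
        lines = [f"protocol bgp {name} from bgp_template {{"]
        if rr_client:
            # Treat the node as a route reflector client of the bird router.
            lines.append("  rr client;")
        lines.append(f"  neighbor {ip} as {as_number};")
        lines.append("}")
        stanzas.append("\n".join(lines))
    return "\n\n".join(stanzas)


if __name__ == "__main__":
    print(render_neighbors(["192.168.0.25", "192.168.0.7"], 63400))
```

Passing `rr_client=False` drops the `rr client;` line for setups where the router should not reflect routes between nodes.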

For ease of deployment, bird is started with Docker here, using the following command:

docker run -itd --net=host --uts=host --cap-add=NET_ADMIN --cap-add=NET_BROADCAST --cap-add=NET_RAW -v /bird/:/etc/bird:ro ibhde/bird4

Check that the container status is Up:

docker ps -a

Calico BGP integration

Install calicoctl on all nodes

wget https://github.com/projectcalico/calicoctl/releases/download/v3.17.4/calicoctl-linux-amd64

mv calicoctl-linux-amd64 /usr/bin/calicoctl

chmod a+x /usr/bin/calicoctl

Disable the global full mesh

cat <<EOF | calicoctl apply -f -
apiVersion: projectcalico.org/v3
kind: BGPConfiguration
metadata:
  name: default
spec:
  logSeverityScreen: Info
  nodeToNodeMeshEnabled: false
  asNumber: 63400
EOF

Label the nodes

The two groups of nodes get different labels. Nodes in 192.168.2.0/24 are labeled rack=rack-1 and connect to the BGP peer 192.168.2.16. Nodes in 192.168.0.0/24 are labeled rack=rack-2 and connect to the BGP peer 192.168.0.40.

kubectl label nodes rke-node3 rack=rack-2

kubectl label nodes rke-node4 rack=rack-2

kubectl label nodes rke-node6 rack=rack-1

kubectl label nodes rke-node7 rack=rack-1
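The rack label is determined purely by the subnet a node's host IP falls in. As a rough illustration, the following Python sketch derives the label commands from the topology listed earlier (rke-node3 and rke-node4 in 192.168.0.0/24, rke-node6 and rke-node7 in 192.168.2.0/24). The names `RACK_BY_SUBNET`, `NODES`, `rack_for` and `label_commands` are assumptions made for this example.

```python
import ipaddress

# Subnet-to-rack mapping as described in the text above.
RACK_BY_SUBNET = {
    "192.168.2.0/24": "rack-1",
    "192.168.0.0/24": "rack-2",
}

# Hostnames and host IPs from the topology section.
NODES = {
    "rke-node3": "192.168.0.7",
    "rke-node4": "192.168.0.25",
    "rke-node6": "192.168.2.14",
    "rke-node7": "192.168.2.15",
}


def rack_for(host_ip):
    """Return the rack label for a host IP based on its subnet."""
    addr = ipaddress.ip_address(host_ip)
    for subnet, rack in RACK_BY_SUBNET.items():
        if addr in ipaddress.ip_network(subnet):
            return rack
    raise ValueError(f"{host_ip} does not belong to a known rack subnet")


def label_commands(nodes=NODES):
    """Build one `kubectl label` command per node (strings only, nothing is executed)."""
    return [
        f"kubectl label nodes {name} rack={rack_for(ip)}"
        for name, ip in nodes.items()
    ]
```

Deriving the label from the IP avoids labeling a node with the wrong rack, which would make it peer with a router on the other subnet.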

Configure the BGP peers with calicoctl

cat <<EOF | calicoctl apply -f -
apiVersion: projectcalico.org/v3
kind: BGPPeer
metadata:
  name: rack1-tor
spec:
  peerIP: 192.168.2.16
  asNumber: 63400
  nodeSelector: rack == 'rack-1'
EOF

cat <<EOF | calicoctl apply -f -
apiVersion: projectcalico.org/v3
kind: BGPPeer
metadata:
  name: rack2-tor
spec:
  peerIP: 192.168.0.40
  asNumber: 63400
  nodeSelector: rack == 'rack-2'
EOF

Check the connections to the BGP peers

Run the following on a node labeled rack=rack-2. It should show an established session with the BGP peer 192.168.0.40.

calicoctl node status

Calico process is running.

IPv4 BGP status

+--------------+---------------+-------+------------+-------------+

| PEER ADDRESS | PEER TYPE | STATE | SINCE | INFO |

+--------------+---------------+-------+------------+-------------+

| 192.168.0.40 | node specific | up | 2022-03-18 | Established |

+--------------+---------------+-------+------------+-------------+

IPv6 BGP status

No IPv6 peers found.

Run the following on a node labeled rack=rack-1. It should show an established session with the BGP peer 192.168.2.16.

calicoctl node status

Calico process is running.

IPv4 BGP status

+--------------+---------------+-------+------------+-------------+

| PEER ADDRESS | PEER TYPE | STATE | SINCE | INFO |

+--------------+---------------+-------+------------+-------------+

| 192.168.2.16 | node specific | up | 2022-03-18 | Established |

+--------------+---------------+-------+------------+-------------+

IPv6 BGP status

No IPv6 peers found.

Create pods and verify route synchronization

kubectl create deployment test --image=nginx --replicas=5

Ping between the 5 replicas to verify that cross-node networking works.

Check the routes learned on the bird node

ip route

default via 192.168.2.1 dev eth0

10.41.210.0/26 via 192.168.2.15 dev eth0 proto bird

10.42.57.192/26 via 192.168.0.25 dev eth1 proto bird

10.42.113.192/26 via 192.168.0.7 dev eth1 proto bird

10.42.210.128/26 via 192.168.2.14 dev eth0 proto bird

192.168.0.0/24 dev eth1 proto kernel scope link src 192.168.0.40

192.168.2.0/24 dev eth0 proto kernel scope link src 192.168.2.16

The output shows that bird has learned the pod CIDR of every node in the cluster.
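Checking the learned routes by eye becomes tedious as the cluster grows. The sketch below shows one way to extract the bird-learned routes from `ip route` output in Python. The function name `bird_routes` is hypothetical, and the parser only handles the simple single-next-hop line format shown above (it skips blackhole and multipath entries).

```python
def bird_routes(ip_route_output):
    """Map each destination installed by bird to its next hop.

    Only lines of the form `<dest> via <gateway> ... proto bird` are
    considered; kernel, dhcp and blackhole routes are skipped.
    """
    routes = {}
    for line in ip_route_output.splitlines():
        fields = line.split()
        if "via" not in fields or "proto" not in fields:
            continue
        proto_idx = fields.index("proto")
        via_idx = fields.index("via")
        # Guard against truncated lines where the keyword is the last token.
        if proto_idx + 1 >= len(fields) or via_idx + 1 >= len(fields):
            continue
        if fields[proto_idx + 1] == "bird":
            routes[fields[0]] = fields[via_idx + 1]
    return routes


if __name__ == "__main__":
    sample = """\
default via 192.168.2.1 dev eth0
10.41.210.0/26 via 192.168.2.15 dev eth0 proto bird
192.168.0.0/24 dev eth1 proto kernel scope link src 192.168.0.40
"""
    print(bird_routes(sample))
```

The resulting dictionary can be compared against the expected pod CIDR of each node to spot a node whose routes were not advertised.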

Check the routes on any node. Taking node 192.168.0.7 as an example, the node also holds routes for all pod CIDRs in the cluster.

ip route

default via 192.168.0.1 dev ens3 proto dhcp src 192.168.0.7 metric 100

10.41.57.192/26 via 192.168.0.25 dev ens3 proto bird

blackhole 10.41.113.192/26 proto bird

10.41.210.0/26 via 192.168.0.40 dev ens3 proto bird

10.41.210.128/26 via 192.168.0.40 dev ens3 proto bird

10.42.57.192/26 via 192.168.0.25 dev ens3 proto bird

192.168.0.0/24 dev ens3 proto kernel scope link src 192.168.0.7

192.168.2.0/24 via 192.168.0.40 dev ens3

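Sending all node pod CIDR traffic through a default route

With the route synchronization above, every node's pod CIDR is propagated to every other node in the cluster, so the number of route entries grows with the size of the Kubernetes cluster. An alternative is to advertise a default route so that all pod traffic from the nodes is directed to the bird software router. A further benefit is that traffic monitoring becomes possible on some hardware SDN devices. Keep in mind, however, how much traffic the router itself can carry.

Taking the bird configuration as an example: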

router id 192.168.0.40;

protocol static {

route 10.41.0.0/16 via 192.168.0.40;

route 10.42.0.0/16 via 192.168.0.40;

}

filter calico_export_to_bgp_peers {

if ( net ~ 10.41.0.0/16 ) then {

accept;

}

if ( net ~ 10.42.0.0/16 ) then {

accept;

}

reject;

}

filter calico_kernel_programming {

if ( net ~ 10.41.0.0/16 ) then {

accept;

}

if ( net ~ 10.42.0.0/16 ) then {

accept;

}

accept;

}

# Configure synchronization between routing tables and kernel.

protocol kernel {

learn; # Learn all alien routes from the kernel

persist; # Don't remove routes on bird shutdown

scan time 2; # Scan kernel routing table every 2 seconds

import all;

export filter calico_kernel_programming; # Default is export none

graceful restart; # Turn on graceful restart to reduce potential flaps in

# routes when reloading BIRD configuration. With a full

# automatic mesh, there is no way to prevent BGP from

# flapping since multiple nodes update their BGP

# configuration at the same time, GR is not guaranteed to

# work correctly in this scenario.

merge paths on; # Allow export multipath routes (ECMP)

}

protocol device {

debug { states };

scan time 2; # Scan interfaces every 2 seconds

}

protocol direct {

debug { states };

interface -"cali*", -"kube-ipvs*", "*"; # Exclude cali* and kube-ipvs* but

# include everything else. In

# IPVS-mode, kube-proxy creates a

# kube-ipvs0 interface. We exclude

# kube-ipvs0 because this interface

# gets an address for every in use

# cluster IP. We use static routes

# for when we legitimately want to

# export cluster IPs.

}

# Template for all BGP clients

template bgp bgp_template {

debug { states };

description "Connection to BGP peer";

local as 63400;

multihop;

gateway recursive; # This should be the default, but just in case.

import all; # Import all routes, since we don't know what the upstream

# topology is and therefore have to trust the ToR/RR.

export filter calico_export_to_bgp_peers; # Only want to export routes for workloads.

source address 192.168.0.40; # The local address we use for the TCP connection

add paths on;

graceful restart; # See comment in kernel section about graceful restart.

connect delay time 2;

connect retry time 5;

error wait time 5,30;

}

protocol bgp Node_192_168_0_25 from bgp_template {

neighbor 192.168.0.25 as 63400;

}

protocol bgp Node_192_168_0_7 from bgp_template {

neighbor 192.168.0.7 as 63400;

}

protocol bgp Node_192_168_2_14 from bgp_template {

neighbor 192.168.2.14 as 63400;

}

protocol bgp Node_192_168_2_15 from bgp_template {

neighbor 192.168.2.15 as 63400;

}

Compared with the earlier configuration, remove rr client from the neighbor configuration and add the static routes to be advertised:

protocol static {

route 10.41.0.0/16 via 192.168.0.40;

route 10.42.0.0/16 via 192.168.0.40;

}

The routes on a host in 192.168.0.0/24 look like this:

ip route

default via 192.168.0.1 dev ens3 proto dhcp src 192.168.0.7 metric 100

10.41.0.0/16 via 192.168.0.40 dev ens3 proto bird

blackhole 10.41.113.192/26 proto bird

10.42.0.0/16 via 192.168.0.40 dev ens3 proto bird

All pod CIDR traffic is sent to the 192.168.0.40 interface of the Bird virtual router.

The routes on a host in 192.168.2.0/24 look like this:

ip route

default via 192.168.2.1 dev ens7

10.41.0.0/16 via 192.168.2.16 dev ens7 proto bird

blackhole 10.41.210.128/26 proto bird

10.42.0.0/16 via 192.168.2.16 dev ens7 proto bird

All pod CIDR traffic is sent to the 192.168.2.16 interface of the Bird virtual router.
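The aggregate /16 routes coexist with the node's own /26 blackhole route because the kernel picks the most specific matching prefix. The Python sketch below illustrates that longest-prefix-match rule using the routes from the 192.168.0.0/24 host output above. The names `ROUTES` and `lookup` are assumptions for this example, and the table omits the per-pod routes that Calico installs for local workloads.

```python
import ipaddress

# (prefix, action) pairs copied from the `ip route` output on 192.168.0.7.
ROUTES = [
    ("0.0.0.0/0", "via 192.168.0.1"),
    ("10.41.0.0/16", "via 192.168.0.40"),
    ("10.41.113.192/26", "blackhole"),
    ("10.42.0.0/16", "via 192.168.0.40"),
]


def lookup(dest, routes=ROUTES):
    """Return (prefix, action) of the most specific route matching dest."""
    addr = ipaddress.ip_address(dest)
    matches = [
        (ipaddress.ip_network(prefix), action)
        for prefix, action in routes
        if addr in ipaddress.ip_network(prefix)
    ]
    if not matches:
        raise LookupError(f"no route to {dest}")
    # Longest prefix wins: a /26 beats a /16, which beats the default route.
    net, action = max(matches, key=lambda m: m[0].prefixlen)
    return str(net), action
```

A pod address on another node, such as 10.41.210.5, matches only the /16 and the default route, so it is forwarded to the bird router. An unassigned address inside the local block matches the /26 blackhole and is dropped instead of looping back to the router.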

节点POD-IP明细路由发布

在实际使用中若期望将calico-pod明细路由发布到BGP路由器中,则需要修改每个节点的calico配置文件

修改方法如下

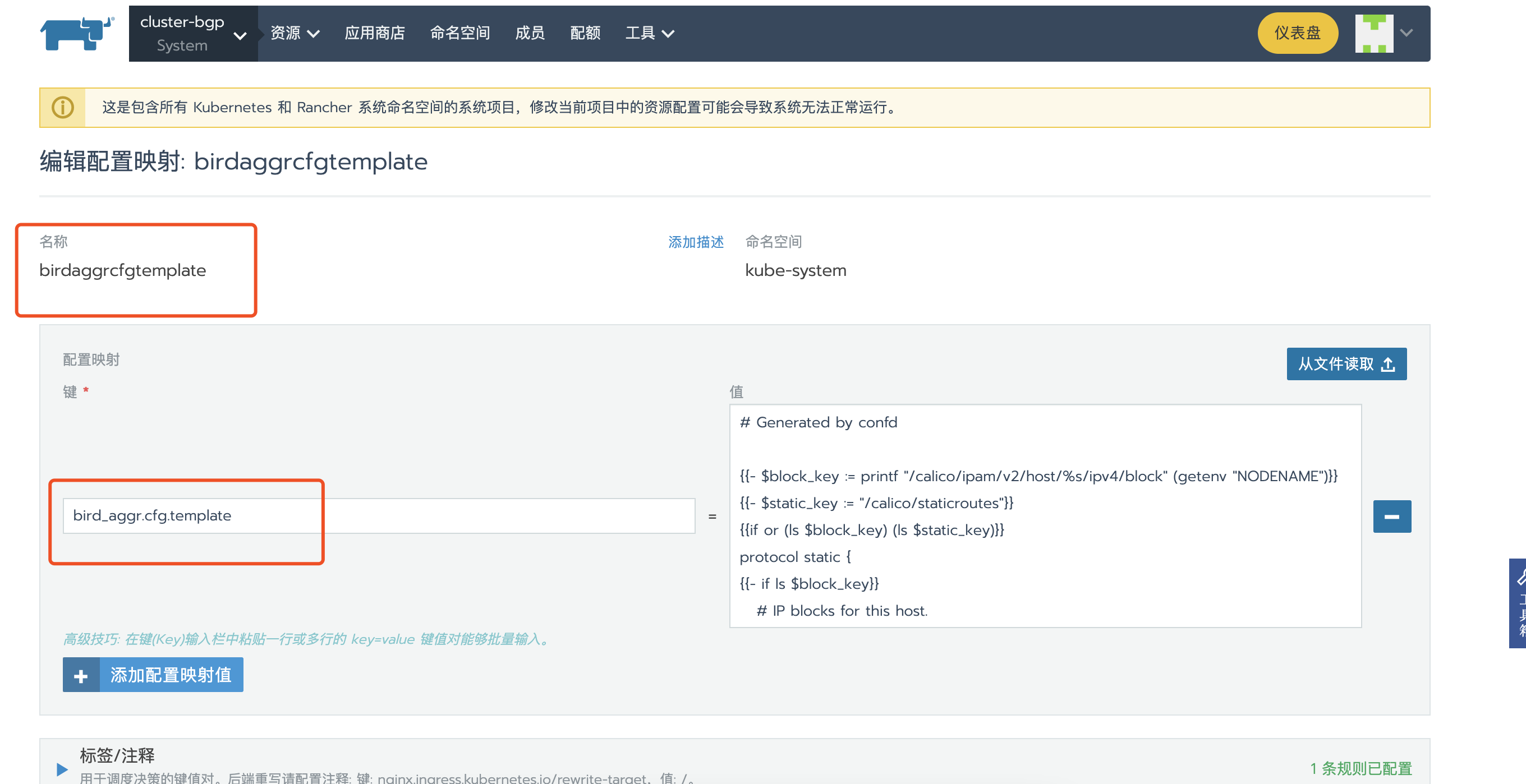

创建configmap,替换calico原有的bird_aggr.cfg.template文件

主要修改以下参数:

注释掉本地黑洞路由,就不会生产本地聚合路由同步到BGP路由器了。

1 | # route {{$cidr}} blackhole; |

允许明细路由同步

将if ( net ~ {{$cidr}} ) then { reject; } 修改为accept

完整配置如下:

# Generated by confd

{{- $block_key := printf "/calico/ipam/v2/host/%s/ipv4/block" (getenv "NODENAME")}}

{{- $static_key := "/calico/staticroutes"}}

{{if or (ls $block_key) (ls $static_key)}}

protocol static {

{{- if ls $block_key}}

# IP blocks for this host.

{{- range ls $block_key}}

{{- $parts := split . "-"}}

{{- $cidr := join $parts "/"}}

# route {{$cidr}} blackhole;

{{- end}}

{{- end}}

{{- if ls $static_key}}

# Static routes.

{{- range ls $static_key}}

{{- $parts := split . "-"}}

{{- $cidr := join $parts "/"}}

# route {{$cidr}} blackhole;

{{- end}}

{{- end}}

}

{{else}}# No IP blocks or static routes for this host.{{end}}

# Aggregation of routes on this host; export the block, nothing beneath it.

function calico_aggr ()

{

{{- range ls $block_key}}

{{- $parts := split . "-"}}

{{- $cidr := join $parts "/"}}

{{- $affinity := json (getv (printf "%s/%s" $block_key .))}}

{{- if $affinity.state}}

# Block {{$cidr}} is {{$affinity.state}}

{{- if eq $affinity.state "confirmed"}}

if ( net = {{$cidr}} ) then { accept; }

if ( net ~ {{$cidr}} ) then { accept; }

{{- end}}

{{- else }}

# Block {{$cidr}} is implicitly confirmed.

if ( net = {{$cidr}} ) then { accept; }

if ( net ~ {{$cidr}} ) then { accept; }

{{- end }}

{{- end}}

}
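The two checks in `calico_aggr` differ only in how they compare the route with the block: `net = cidr` is an exact match, while `net ~ cidr` matches any prefix inside the block. The following Python sketch models that behaviour with the standard `ipaddress` module, assuming `~` between two prefixes means "is contained in". The function names and the `allow_specific` flag are illustrative and are not part of Calico or BIRD.

```python
import ipaddress


def net_equals(route, cidr):
    """Model of `net = cidr`: the route is exactly the block."""
    return ipaddress.ip_network(route) == ipaddress.ip_network(cidr)


def net_within(route, cidr):
    """Model of `net ~ cidr`: the route lies inside the block."""
    return ipaddress.ip_network(route).subnet_of(ipaddress.ip_network(cidr))


def calico_aggr(route, block, allow_specific):
    """Return the filter decision for one route against one block.

    allow_specific=False models a template that rejects routes beneath
    the block; allow_specific=True models the modified template above.
    """
    if net_equals(route, block):
        return "accept"
    if net_within(route, block):
        return "accept" if allow_specific else "reject"
    return "no match"
```

With `allow_specific=False`, a /32 pod route such as 10.41.113.193/32 inside 10.41.113.192/26 is rejected and only the block is exported. With `allow_specific=True` the same /32 is accepted, which is what produces the per-pod routes on the BGP router.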

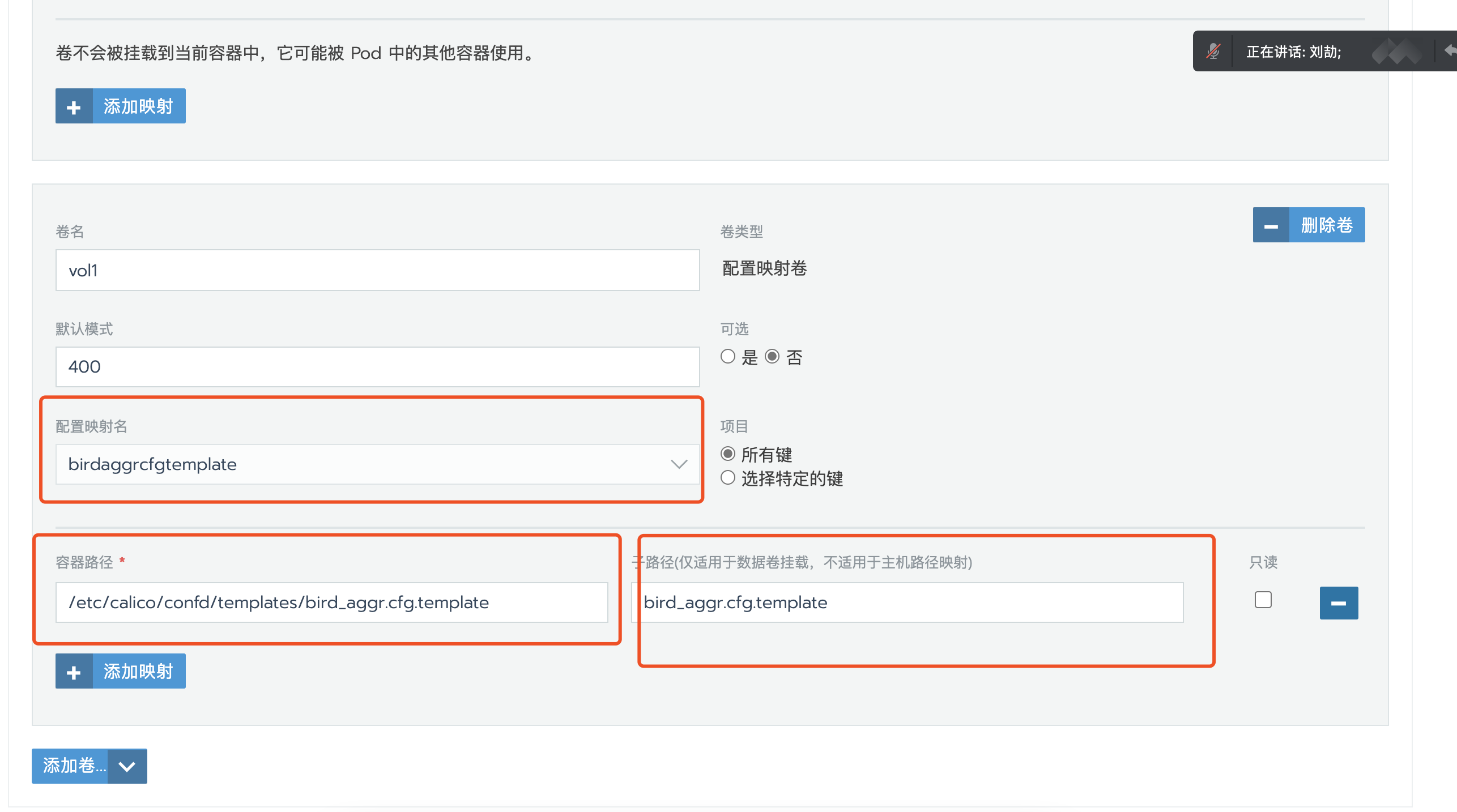

Upgrade calico-node so that it mounts this ConfigMap as the configuration file.

Recreate calico-node

Check the Bird node

ip route

default via 192.168.2.1 dev eth0

10.41.0.0/16 via 192.168.0.40 dev eth1 proto bird

10.41.57.199 via 192.168.0.25 dev eth1 proto bird

10.41.57.203 via 192.168.0.25 dev eth1 proto bird

10.41.57.204 via 192.168.0.25 dev eth1 proto bird

10.41.113.193 via 192.168.0.7 dev eth1 proto bird

10.41.113.194 via 192.168.0.7 dev eth1 proto bird

10.41.113.195 via 192.168.0.7 dev eth1 proto bird

10.41.113.196 via 192.168.0.7 dev eth1 proto bird

10.41.113.198 via 192.168.0.7 dev eth1 proto bird

10.41.113.201 via 192.168.0.7 dev eth1 proto bird

10.41.113.202 via 192.168.0.7 dev eth1 proto bird

10.41.210.6 via 192.168.2.15 dev eth0 proto bird

10.41.210.7 via 192.168.2.15 dev eth0 proto bird

10.41.210.8 via 192.168.2.15 dev eth0 proto bird

10.41.210.9 via 192.168.2.15 dev eth0 proto bird

10.41.210.137 via 192.168.2.14 dev eth0 proto bird

10.41.210.138 via 192.168.2.14 dev eth0 proto bird

10.42.0.0/16 via 192.168.0.40 dev eth1 proto bird

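The specific route of every pod has now been learned. This approach puts heavy pressure on routing devices, because they must maintain a large number of route entries, and every pod deletion or creation triggers a route update. Evaluate it carefully before using it in production.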
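To get a feel for the difference in scale, the sketch below compares the number of routes a router holds with per-block advertisement against per-pod advertisement. It is a back-of-the-envelope estimate only: the function name `route_counts` is hypothetical, the block size of 64 corresponds to the /26 blocks seen in this document, and the figure of 110 pods per node in the usage note is an assumed example value.

```python
import math


def route_counts(nodes, pods_per_node, block_size=64):
    """Estimate route entries on the BGP router for both advertisement modes.

    per_block: one route per allocated block (a /26 holds 64 addresses),
               assuming a node gets another block once the previous one is full.
    per_pod:   one route per pod.
    """
    blocks_per_node = math.ceil(pods_per_node / block_size)
    return {
        "per_block": nodes * blocks_per_node,
        "per_pod": nodes * pods_per_node,
    }
```

For 4 nodes with 110 pods each, this gives 8 block routes against 440 pod routes, and the gap widens linearly with the number of pods.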

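In real workloads, moreover, an IP pool is often assigned per service or per deployment. With this usage pattern Calico's IP pools can no longer be aggregated by node, which leaves scattered addresses that cannot be aggregated. In the worst case every pod produces its own route, so the number of routes grows to the number of pods.

By default, switches apply convergence protection to BGP routes in order to prevent route flapping. In a Kubernetes cluster, however, pods are short-lived and change frequently, so this route-change protection on the network devices has to be turned off to meet Kubernetes' requirements. Route convergence speed also differs between network devices. For large-scale pod scale-out and migration, or for a switchover between two data centers, you need to benchmark and stress-test the devices' route convergence speed in addition to accounting for pod scheduling and startup time.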

https://blog.51cto.com/u_14992974/2549877

Advertising the Service CIDR

To let clients outside the cluster reach in-cluster services through a Service's cluster IP, the Service CIDR can be advertised through Calico BGP.

calicoctl patch BGPConfig default --patch '{"spec": {"serviceClusterIPs": [{"cidr": "10.43.0.0/16"}]}}'

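After it is advertised, multiple next hops for 10.43.0.0/16 are visible on the bird node, because ECMP (equal-cost multi-path) is used to load-balance the route.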

ip route

10.43.0.0/16 proto bird

nexthop via 192.168.0.7 dev eth1 weight 1

nexthop via 192.168.0.25 dev eth1 weight 1

nexthop via 192.168.2.14 dev eth0 weight 1

nexthop via 192.168.2.15 dev eth0 weight 1
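With four equal-weight next hops, the router spreads flows across the nodes while keeping each flow on one path. The Python sketch below illustrates the general idea of hash-based ECMP selection. It is a conceptual model only: the Linux kernel uses its own hash function and hash policy, and the names `NEXT_HOPS` and `pick_next_hop` are assumptions for this example.

```python
import hashlib

# Next hops from the 10.43.0.0/16 multipath route shown above.
NEXT_HOPS = ["192.168.0.7", "192.168.0.25", "192.168.2.14", "192.168.2.15"]


def pick_next_hop(src_ip, dst_ip, next_hops=NEXT_HOPS):
    """Pick a next hop deterministically from a hash of the flow key.

    The same (src, dst) pair always maps to the same next hop, so packets
    of one flow stay on one path while different flows are spread out.
    """
    digest = hashlib.sha256(f"{src_ip}->{dst_ip}".encode()).digest()
    index = int.from_bytes(digest[:4], "big") % len(next_hops)
    return next_hops[index]


if __name__ == "__main__":
    for client in ("172.16.0.10", "172.16.0.11", "172.16.0.12"):
        print(client, "->", pick_next_hop(client, "10.43.0.1"))
```

Whichever node receives the packet, kube-proxy on that node forwards it to a backing pod, which is why any node can serve as a next hop for the Service CIDR.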

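Once specific-route advertisement is configured, the Service CIDR is no longer visible on the BGP router. This can be fixed by modifying the bird_aggr.cfg.template file.

Add the $servicesubnet_split configuration, adjusting its network to the cluster's actual Service CIDR.

The complete bird_aggr.cfg.template file is as follows: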

# Generated by confd

{{- $block_key := printf "/calico/ipam/v2/host/%s/ipv4/block" (getenv "NODENAME")}}

{{- $static_key := "/calico/staticroutes"}}

{{- $servicesubnet_split := split "10.43.0.0/16" " " }}

{{if or (ls $block_key) (ls $static_key)}}

protocol static {

{{- if ls $block_key}}

# IP blocks for this host.

{{- range ls $block_key}}

{{- $parts := split . "-"}}

{{- $cidr := join $parts "/"}}

# route {{$cidr}} blackhole;

{{- end}}

{{- end}}

{{- if ls $static_key}}

# Static routes.

{{- range ls $static_key}}

{{- $parts := split . "-"}}

{{- $cidr := join $parts "/"}}

# route {{$cidr}} blackhole;

{{- end}}

{{- end}}

# Service IP block

{{- if $servicesubnet_split}}

{{- range $servicesubnet_split}}

route {{.}} blackhole;

{{- end}}

{{- end}}

}

{{else}}# No IP blocks or static routes for this host.{{end}}

# Aggregation of routes on this host; export the block, nothing beneath it.

# Export the service block.

function accept_servicesubnet ()

{

{{- range $servicesubnet_split}}

if ( net = {{.}} ) then { accept; }

if ( net ~ {{.}} ) then { reject; }

{{- end}}

}

function deny_servicesubnet ()

{

{{- range $servicesubnet_split}}

if ( net = {{.}} ) then { reject; }

if ( net ~ {{.}} ) then { reject; }

{{- end}}

}

function calico_aggr ()

{

{{- range ls $block_key}}

{{- $parts := split . "-"}}

{{- $cidr := join $parts "/"}}

{{- $affinity := json (getv (printf "%s/%s" $block_key .))}}

{{- if $affinity.state}}

# Block {{$cidr}} is {{$affinity.state}}

{{- if eq $affinity.state "confirmed"}}

if ( net = {{$cidr}} ) then { accept; }

if ( net ~ {{$cidr}} ) then { accept; }

{{- end}}

{{- else }}

# Block {{$cidr}} is implicitly confirmed.

if ( net = {{$cidr}} ) then { accept; }

if ( net ~ {{$cidr}} ) then { accept; }

{{- end }}

{{- end}}

}