bpftrace是基于eBPF实现的动态的工具,使用DSL(Domain Specific Language)编写eBPF程序,使用LLVM编译eBPF字节码,BCC与LinuxBPF系统交互。直接使用DSL编写好的脚本(类似awk语言)可以执行,无需在内核中手动编译和加载。bpftrace在内核中实现动态追踪主要是使用Kprobe探针和Tracepoints探针。使用bpftrace可以更深入的进行操作系统上问题排查,如某个函数的调用次数和延时、追踪系统OOMKILL、TCP连接丢包等。都可以自定义脚本实现。

https://github.com/iovisor/bpftrace https://github.com/iovisor/bcc

系统环境:

参考官网安装方式https://github.com/iovisor/bpftrace/blob/master/INSTALL.md https://github.com/iovisor/bpftrace/blob/master/scripts/check_kernel_features.sh

1 2 3 ./check_kernel_features.sh All required features present!

安装bpftrace

1 2 sudo apt-get install -y bpftrace bpfcc-tools

安装完成后查看版本

1 2 3 bpftrace --version bpftrace v0.9.4

列出当前内核支持的Kprobes探针列表

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 bpftrace -l 'kprobe:tcp* kprobe:tcp_mmap kprobe:tcp_get_info_chrono_stats kprobe:tcp_init_sock kprobe:tcp_splice_data_recv kprobe:tcp_push kprobe:tcp_send_mss kprobe:tcp_cleanup_rbuf kprobe:tcp_set_rcvlowat kprobe:tcp_recv_timestamp kprobe:tcp_enter_memory_pressure kprobe:tcp_leave_memory_pressure kprobe:tcp_ioctl kprobe:tcp_get_info kprobe:tcp_get_md5sig_pool kprobe:tcp_set_state kprobe:tcp_shutdown ...

内核静态探针-Tracepoint

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 bpftrace -l 'tracepoint:*' kprobe:tcp_mmap kprobe:tcp_get_info_chrono_stats kprobe:tcp_init_sock kprobe:tcp_splice_data_recv kprobe:tcp_push kprobe:tcp_send_mss kprobe:tcp_cleanup_rbuf kprobe:tcp_set_rcvlowat kprobe:tcp_recv_timestamp kprobe:tcp_enter_memory_pressure kprobe:tcp_leave_memory_pressure kprobe:tcp_ioctl kprobe:tcp_get_info kprobe:tcp_get_md5sig_pool kprobe:tcp_set_state kprobe:tcp_shutdown tracepoint:nfsd:nfsd_compound tracepoint:nfsd:nfsd_compound_status tracepoint:nfsd:nfsd_read_start tracepoint:nfsd:nfsd_read_splice tracepoint:nfsd:nfsd_read_vector tracepoint:nfsd:nfsd_read_io_done tracepoint:nfsd:nfsd_read_done tracepoint:nfsd:nfsd_write_start tracepoint:nfsd:nfsd_write_opened tracepoint:nfsd:nfsd_write_io_done tracepoint:nfsd:nfsd_write_done tracepoint:nfsd:nfsd_read_err tracepoint:nfsd:nfsd_write_err tracepoint:nfsd:nfsd_layoutstate_alloc tracepoint:nfsd:nfsd_layoutstate_unhash ...

例如列出所有的进程打开的文件

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 bpftrace -e 'tracepoint:syscalls:sys_enter_openat { printf("%s %s\n", comm, str(args->filename)); }' kubelet /sys/fs/cgroup/memory/kubepods.slice/memory.numa_stat kubelet /sys/fs/cgroup/cpu,cpuacct/kubepods.slice/cpu.stat kubelet /sys/fs/cgroup/cpu,cpuacct/kubepods.slice/cpuacct.stat kubelet /sys/fs/cgroup/cpu,cpuacct/kubepods.slice/cpuacct.usage kubelet /sys/fs/cgroup/cpu,cpuacct/kubepods.slice/cpuacct.usage_percpu kubelet /sys/fs/cgroup/cpu,cpuacct/kubepods.slice/cpuacct.usage_all kubelet /sys/fs/cgroup/pids/kubepods.slice/pids.current kubelet /sys/fs/cgroup/pids/kubepods.slice/pids.max kubelet /sys/fs/cgroup/blkio/kubepods.slice/blkio.bfq.sectors_recursive kubelet /sys/fs/cgroup/blkio/kubepods.slice/blkio.bfq.io_serviced_recur kubelet /sys/fs/cgroup/blkio/kubepods.slice/blkio.sectors_recursive kubelet /sys/fs/cgroup/blkio/kubepods.slice/blkio.throttle.io_serviced_ ...

ctrl+c暂停

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 70 71 72 73 74 75 76 77 78 79 80 81 82 83 ls /usr/sbin/|grep ".*.bt" bashreadline.bt biolatency.bt biosnoop.bt biostacks.bt bitesize.bt capable.bt cpuwalk.bt dcsnoop.bt ebtables ebtables-nft ebtables-nft-restore ebtables-nft-save ebtables-restore ebtables-save execsnoop.bt gethostlatency.bt killsnoop.bt loads.bt mdflush.bt naptime.bt oomkill.bt opensnoop.bt pidpersec.bt runqlat.bt runqlen.bt setuids.bt statsnoop.bt swapin.bt syncsnoop.bt syscount.bt tcpaccept.bt tcpconnect.bt tcpdrop.bt tcplife.bt tcpretrans.bt tcpsynbl.bt threadsnoop.bt vfscount.bt vfsstat.bt writeback.bt xfsdist.bt

https://github.com/iovisor/bpftrace/tree/master/tools 也存在很多脚本和测试用例。

比如执行tcpconnect.bt 可以参考到本机所有的TCP网络连接

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 tcpconnect.bt Attaching 2 probes... Tracing tcp connections. Hit Ctrl-C to end. TIME PID COMM SADDR SPORT DADDR DPORT 23:02:09 1686607 coredns 127.0.0.1 42216 127.0.0.1 13429 23:02:10 1686607 coredns 127.0.0.1 42218 127.0.0.1 13429 23:02:11 1680193 kubelet 10.0.1.11 34732 10.0.1.15 13429 23:02:11 1680193 kubelet 10.0.1.11 41570 10.0.1.244 13429 23:02:11 1686607 coredns 127.0.0.1 42224 127.0.0.1 13429 23:02:11 1680193 kubelet 127.0.0.1 55010 127.0.0.1 13429 23:02:11 1680193 kubelet 10.0.1.11 33098 10.0.1.145 13429 23:02:12 1686607 coredns 127.0.0.1 42230 127.0.0.1 13429 23:02:13 1680193 kubelet 127.0.0.1 34214 127.0.0.1 13429 23:02:13 1686607 coredns 127.0.0.1 42234 127.0.0.1 13429

追踪全系统范围内open()调用

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 opensnoop.bt Attaching 6 probes... Tracing open syscalls... Hit Ctrl-C to end. PID COMM FD ERR PATH 1686817 AsyncBlockInput 2 0 /var/lib/clickhouse_storage/store/087/0872950b-6ca9-420e-8872-9 1686817 AsyncBlockInput 2 0 /var/lib/clickhouse_storage/store/087/0872950b-6ca9-420e-8872-9 1686817 AsyncBlockInput 2 0 /var/lib/clickhouse_storage/store/435/435debae-6288-4661-835d-e 1686817 AsyncBlockInput 2 0 /var/lib/clickhouse_storage/store/435/435debae-6288-4661-835d-e 1686817 AsyncBlockInput 2 0 /var/lib/clickhouse_storage/store/092/09246824-dd69-4f4c-8924-6 1686817 AsyncBlockInput 2 0 /var/lib/clickhouse_storage/store/092/09246824-dd69-4f4c-8924-6 1680193 kubelet 2 0 /sys/fs/cgroup/memory/kubepods.slice/kubepods-besteffort.slice/ 1680193 kubelet 2 0 /sys/fs/cgroup/memory/kubepods.slice/kubepods-besteffort.slice/ 1680193 kubelet 2 0 /sys/fs/cgroup/memory/kubepods.slice/kubepods-besteffort.slice/ 1680193 kubelet 2 0 /sys/fs/cgroup/memory/kubepods.slice/kubepods-besteffort.slice/ 1680193 kubelet 2 0 /sys/fs/cgroup/memory/kubepods.slice/kubepods-besteffort.slice/ 1680193 kubelet 2 0 /sys/fs/cgroup/memory/kubepods.slice/kubepods-besteffort.slice/ 1680193 kubelet 2 0 /sys/fs/cgroup/memory/kubepods.slice/kubepods-besteffort.slice/ 1680193 kubelet 2 0 /sys/fs/cgroup/memory/kubepods.slice/kubepods-besteffort.slice/ 1680193 kubelet 2 0 /sys/fs/cgroup/memory/kubepods.slice/kubepods-besteffort.slice/ 1680193 kubelet 2 0 /sys/fs/cgroup/memory/kubepods.slice/kubepods-besteffort.slice/ 1680193 kubelet 2 0 /sys/fs/cgroup/memory/kubepods.slice/kubepods-besteffort.slice/ 1680193 kubelet 2 0 /sys/fs/cgroup/memory/kubepods.slice/kubepods-besteffort.slice/ 1680193 kubelet 2 0 /sys/fs/cgroup/memory/kubepods.slice/kubepods-besteffort.slice/ 1680193 kubelet 2 0 /sys/fs/cgroup/memory/kubepods.slice/kubepods-besteffort.slice/ 1680193 kubelet 2 0 /sys/fs/cgroup/memory/kubepods.slice/kubepods-besteffort.slice/

展示最消耗IO的进程及数据写入量

1 2 3 4 5 6 7 8 9 10 11 biotop-bpfcc 20:53:20 loadavg: 0.96 1.40 1.26 2/2392 3295655 PID COMM D MAJ MIN DISK I/O Kbytes AVGms 337 jbd2/vda1-8 W 252 0 vda 2 412.0 5.16 1680139 etcd W 252 0 vda 14 60.0 2.61 3295622 rancher-system- R 252 0 vda 1 56.0 2.07

查看每个进程对应的执行命令和参数

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 execsnoop-bpfcc -T TIME PCOMM PID PPID RET ARGS 21:22:05 rancher-system- 3310507 1 0 /usr/local/bin/rancher-system-agent sentinel 21:22:06 cilium-cni 3310516 1680193 0 /opt/cni/bin/cilium-cni 21:22:06 iptables 3310525 1680164 0 /usr/sbin/iptables -w 5 -W 100000 -S KUBE-PROXY-CANARY -t mangle 21:22:06 ip6tables 3310524 1680164 0 /usr/sbin/ip6tables -w 5 -W 100000 -S KUBE-PROXY-CANARY -t mangle 21:22:06 nsenter 3310526 1680193 0 /usr/bin/nsenter --net=/proc/1688099/ns/net -F -- ip -o -4 addr show dev eth0 scope global 21:22:06 ip 3310526 1680193 0 /usr/sbin/ip -o -4 addr show dev eth0 scope global 21:22:06 nsenter 3310527 1680193 0 /usr/bin/nsenter --net=/proc/1688099/ns/net -F -- ip -o -6 addr show dev eth0 scope global 21:22:06 ip 3310527 1680193 0 /usr/sbin/ip -o -6 addr show dev eth0 scope global 21:22:06 runc 3310528 1679752 0 /usr/bin/runc --version 21:22:06 docker-init 3310534 1679752 0 /usr/bin/docker-init --version

常用的一些脚本作用

killsnoop.bt——追踪 kill() 系统调用发出的信号

tcpconnect.bt——追踪所有的 TCP 网络连接

pidpersec.bt——统计每秒钟(通过fork)创建的新进程

opensnoop.bt——追踪 open() 系统调用

bfsstat.bt——追踪一些 VFS 调用,按秒统计

bashreadline.bt——打印从所有运行shell输入的bash命令

tcplife.bt——追踪TCP连接生命周期

biotop-bpfcc——展示进程io写入

内核态

总结

Perf(Performance Event)是在Linux Kernel2.6集成在Linux Kernel中,主要利用CPU中PMU (Performance Monitoring Unit)和Linux中的 tracepoint实现目标取样和性能分析。Perf工具根eBPF实际上没什么关系,这里写这个工具主要是因为它本身也可以实现应用程序动态追踪,也利用到了tracepoint的能力,但与eBPF不同的是Perf是写死的能力,bpftrace基于eBPF是可以实现脚本灵活的穿插和调用。

安装部署

1 2 3 sudo apt-get install linux-tools-common linux-tools-"``(uname -r)" linux-cloud-tools-"``(uname -r)" linux-tools-generic linux-cloud-tools-generic

验证版本

1 2 3 perf -v perf version 5.4.195

采样事件

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 perf list List of pre-defined events (to be used in -e): alignment-faults [Software event] bpf-output [Software event] context-switches OR cs [Software event] cpu-clock [Software event] cpu-migrations OR migrations [Software event] dummy [Software event] emulation-faults [Software event] major-faults [Software event] minor-faults [Software event] page-faults OR faults [Software event] task-clock [Software event] duration_time [Tool event] msr/tsc/ [Kernel PMU event] rNNN [Raw hardware event descriptor] cpu/t1=v1[,t2=v2,t3 ...]/modifier [Raw hardware event descriptor] (see 'man perf-list' on how to encode it) mem:<addr>[/len][:access] [Hardware breakpoint] alarmtimer:alarmtimer_cancel [Tracepoint event] alarmtimer:alarmtimer_fired [Tracepoint event] ...

主要分为三类:

perf top展示各个进程和函数资源占用情况,-g显示子进程,按e显示子进程函数

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 perf top -g Samples: 284K of event 'cpu-clock:pppH', 4000 Hz, Event count (approx.): 33836570425 lost: 0/0 drop: 0/0 Children Self Shared Object Symbol - 20.27% 0.09% perf [.] __ordered_events__flush.part.0 ◆ - 2.20% __ordered_events__flush.part.0 ▒ - 2.56% deliver_event ▒ - 3.39% hist_entry_iter__add ▒ - 3.79% iter_add_next_cumulative_entry ▒ - 3.03% __hists__add_entry.constprop.0 ▒ 3.79% hists__findnew_entry ▒ - 1.54% callchain_append ▒ - 2.64% append_chain_children ▒ - 2.22% append_chain_children ▒ - 1.73% append_chain_children ▒ - 1.34% append_chain_children ▒ 1.07% append_chain_children ▒ + 20.13% 0.18% perf [.] deliver_event ▒ + 18.62% 0.04% perf [.] hist_entry_iter__add ▒ + 14.47% 0.80% perf [.] iter_add_next_cumulative_entry ▒ + 12.05% 0.96% [kernel] [k] do_syscall_64 ▒ + 8.99% 0.00% perf [.] process_thread ▒ + 8.93% 0.22% [kernel] [k] do_idle ▒ + 8.83% 1.06% [kernel] [k] __softirqentry_text_start ▒ + 8.24% 6.28% perf [.] append_chain_children ▒ + 7.11% 0.06% perf [.] callchain_append ▒ + 5.96% 4.75% libc-2.31.so [.] pthread_attr_setschedparam ▒ + 5.74% 0.25% perf [.] __hists__add_entry.constprop.0

[.]:表示运行在用户态空间

perf state查看程序运行情况

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 perf stat -p 1679752 按ctrl+c输出结果 Performance counter stats for process id '1679752': 682.90 msec task-clock # 0.066 CPUs utilized 3154 context-switches # 0.005 M/sec 36 cpu-migrations # 0.053 K/sec 3275 page-faults # 0.005 M/sec <not supported> cycles <not supported> instructions <not supported> branches <not supported> branch-misses

Task-clock:CPU 利用率

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 Samples: 1K of event 'block:block_rq_issue', 1 Hz, Event count (approx.): 136 lost: 0/0 drop: 0/0 Children Self Trace output + 16.91% 16.91% 252,0 FF 0 () 0 + 0 [kworker/0:1H] + 15.44% 15.44% 252,0 FF 0 () 0 + 0 [kworker/7:1H] + 9.56% 9.56% 252,0 FF 0 () 0 + 0 [kworker/3:1H] + 8.09% 8.09% 252,0 FF 0 () 0 + 0 [kworker/4:1H] + 6.62% 6.62% 252,0 WS 4096 () 18340064 + 8 [etcd] + 6.62% 6.62% 252,0 WS 4096 () 18340072 + 8 [etcd] + 5.15% 5.15% 252,0 FF 0 () 0 + 0 [kworker/2:1H] + 4.41% 4.41% 252,0 FF 0 () 0 + 0 [kworker/6:1H] + 2.94% 2.94% 252,0 WS 4096 () 122005280 + 8 [etcd] 2.21% 2.21% 252,0 WS 4096 () 115952144 + 8 [etcd] 2.21% 2.21% 252,0 WS 4096 () 122005272 + 8 [etcd] + 1.47% 1.47% 252,0 FF 0 () 0 + 0 [kworker/1:1H] + 1.47% 1.47% 252,0 WS 4096 () 116164552 + 8 [etcd] 1.47% 1.47% 252,0 WS 4096 () 116173824 + 8 [etcd] + 1.47% 1.47% 252,0 WS 4096 () 122005256 + 8 [etcd] 1.47% 1.47% 252,0 WS 4096 () 122005288 + 8 [etcd] 1.47% 1.47% 252,0 WS 4096 () 122005296 + 8 [etcd] 0.74% 0.74% 252,0 FF 0 () 0 + 0 [kworker/5:1H] 0.74% 0.74% 252,0 WS 516096 () 2388520 + 1008 [jbd2/vda1-8] 0.74% 0.74% 252,0 WS 372736 () 2389528 + 728 [jbd2/vda1-8] 0.74% 0.74% 252,0 WS 4096 () 115700160 + 8 [etcd] 0.74% 0.74% 252,0 WS 4096 () 115948608 + 8 [etcd]

对CPU事件进行检测,采样时间60s,每秒采样99个事件,采样完成后会在本地生成个perf.data文件,如果执行多次,会将上一个重命名为perf.data.old。加-p可以指定进程号输出。

1 2 perf record -F 99 -a -g -- sleep 60

查看报告

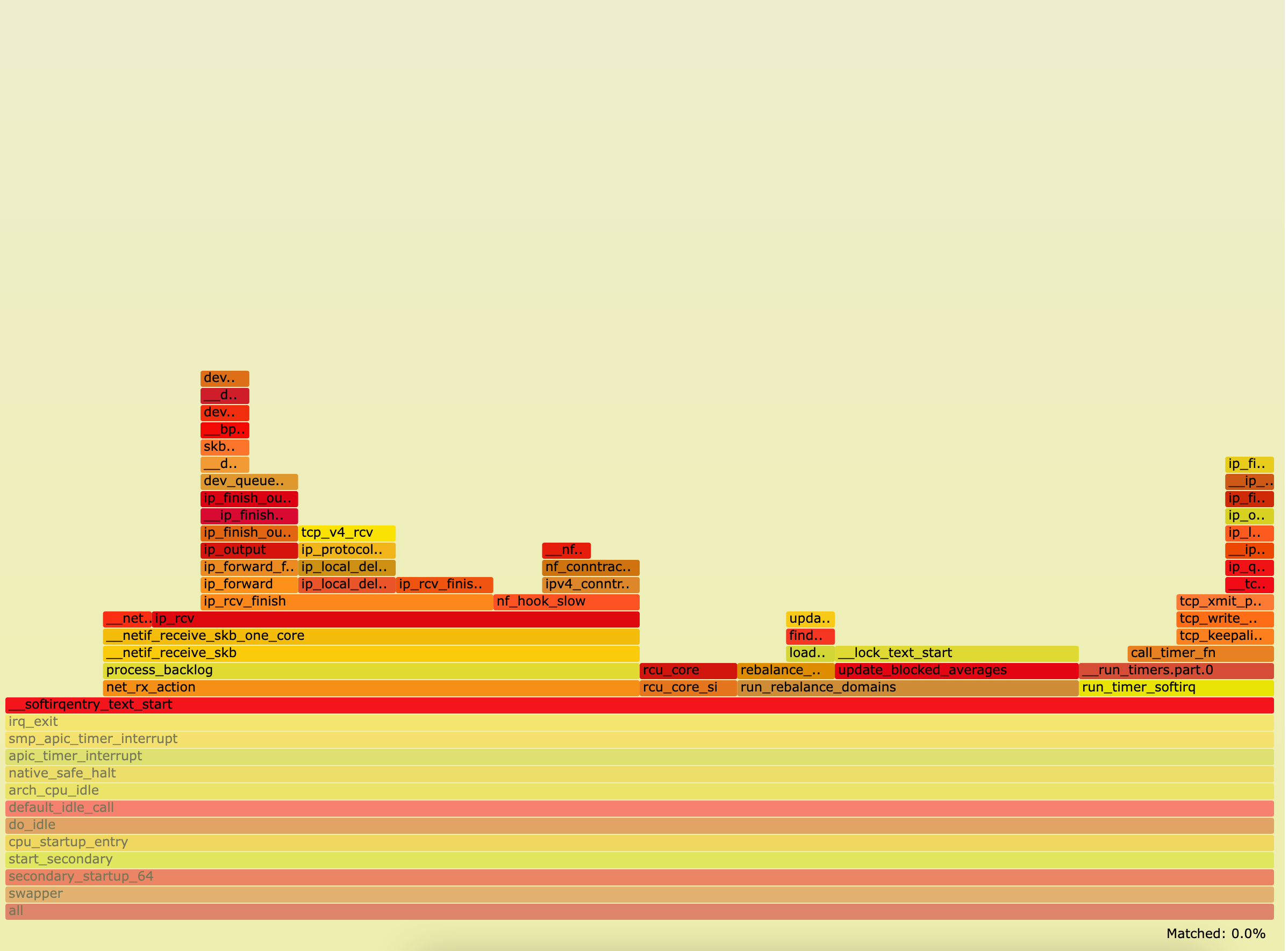

生成火焰图

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 下载制作火焰图工具 git clone https://github.com/brendangregg/FlameGraph.git 对perf.data进行解析 perf script -i perf.data &> perf.unfold 进行符号处理 FlameGraph/stackcollapse-perf.pl perf.unfold &> perf.folded 生成火焰图 FlameGraph/flamegraph.pl perf.folded > perf.svg

使用chrome浏览器打开

火焰图怎么查看分析可参考https://www.infoq.cn/article/a8kmnxdhbwmzxzsytlga

参考链接https://github.com/iovisor/bpftrace/blob/master/docs/reference_guide.md http://blog.nsfocus.net/bpftrace-dynamic-tracing-0828/ https://www.cnblogs.com/arnoldlu/p/6241297.html https://access.redhat.com/documentation/zh-cn/red_hat_enterprise_linux/8/html/monitoring_and_managing_system_status_and_performance/counting-events-during-process-execution-with-perf-stat_monitoring-and-managing-system-status-and-performance